Value Iteration in real world

n real world, we don’t have the state transition probability distribution or the reward function. You may try sampling them, but you will never know the exact probabilities of them. As the result, you can not compute the expectation of the action values. We want a new algorithm that would get rid of this probability distribution. The new algorithm will only use samples from the environment.

So in the real world, there are 2 things impossible to do in Value Iteration:

- You can not compute the maximum over all possible actions. Because you can not take all actions and go back in time.

- You can not compute the expectation of value function of next state. Because in real life, you’re going to see one outcome, one possible result.

Model-free settings

In model-free settings, you train from “trajectories” instead of all states and actions. Trajectories basically are history of your agent – a set of states, actions, rewards. Once we got some trajectories, we somehow use them to train our algorithm. We prefer to train / learn action-value function q, instead of the state-value function V. Because V is useless without probabilities distribution p.

Monte Carlo

The method is to sample the trajectories, and then average over all the trajectories concerning a particular state. This method does work technically, but the statistics says it is not the best estimate, and it will converge, provided you give lots of samples, but this amount of samples might not be realistic. There is a better way than this.

Temporal Difference

We can improve action value function Q(s, a) iteratively. That is we can exploit the recurrent formula for Q value – use your current maximum of these estimation of Q values, to refine a Q value at a different state. One choice is using moving average with just one sample. To train via Q learning to perform one elementary update, you only need a small section of the trajectory: a state, an action, a reward and next state.

Exploration and exploitation

Something between using what you learned and trying to find something even better, but you have guarantees of convergence. The simplest exploration is so-called epsilon greedy exploration, whose uniform probability distribution has some drawback. Softmax strategy is also useful, which is trying to make Q(s, a) value into some kinds of probabilities. The huge problem of these 2 strategies is that you need to gradually reduce exploration, if you want to converge to optimal policy.

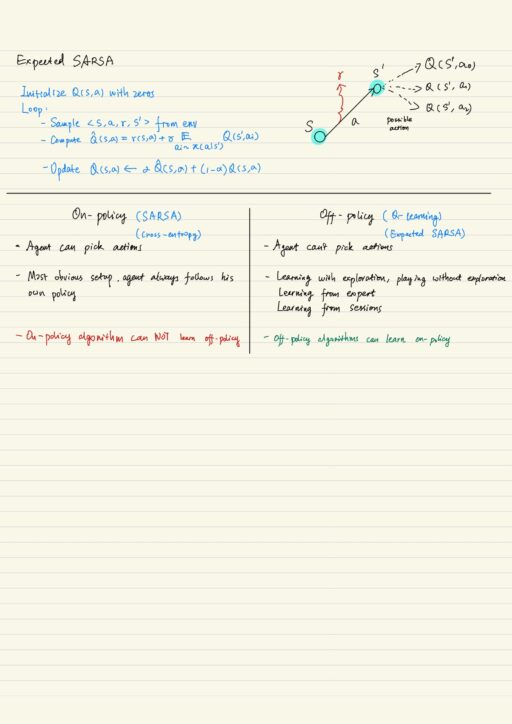

But exploration does not always work. It sometimes fails Q-learning from maximizing rewards, for example: Cliff world. The decision must account for actual policy (e.g. epsilon greedy) to avoid regions where exploration is most likely to be detrimental. SARSA comes to the rescue, in which the maximum of next Q-values is replaced by expectation of next Q-values. The expectation should be done over the probability denoted by epsilon-greedy exploration policy.

On-policy and off-policy

On-policy algorithms (e.g. SARSA) assume that their experience comes from the agent itself, get optimal strategies as soon as possible. Off-policy algorithms (e.g. Q-learning) don’t assume that the experience obtained is going to be used / applied to the actual problem. On possible situation is that an agent was trained with epsilon based or Boltzmann based exploration, and then this exploration goes away and it gets to pick optimal actions all the time. Another possible situation is to train your agent on a policy which is different from its current policy. For example: pre-train a self-driving car NOT on its own actions, but on actions of an actual human driver. Expected SARSA can be either on-policy or off-policy, which depends on the value of epsilon.

Experience replay

The idea is to store serval past interactions and train on random subsamples. The agent plays 1 step and record it, then pick N random transitions to train. This is helpful when taking action is expensive, you don’t need to re-visit the same state-action pair many times to learn it. The replay buffer only works with off-policy algorithms.

My Certificate

For more on model-free reinforcement learning, please refer to the wonderful course here https://www.coursera.org/learn/practical-rl/

Related Quick Recap

I am Kesler Zhu, thank you for visiting. Check out all of my course reviews at https://KZHU.ai