A vector space consists of a set of vectors (column matrices) and a set of scalars (real numbers), which is closed under vector addition and scalar multiplication. For example, assuming u, v are 3-by-1 matrices; a, b are real numbers; w = a u + b v is a 3-by-1 matrix.

Linear independence

A set of vectors {u1, u2, …, un} is linearly independent if the equation c1 u1 + c2 u2 + … + cn un = 0 has only one solution c1 = c2 = … = cn = 0. It means you can not write any vectors in the set as a linear combination of other vectors in the set.

If you are given a set of vectors, you can generate a vector space by just forming all linear combination of that set of vectors, then we say that set of vectors span that vector space. Basis of a vector space is a set of minimum number of vectors that span the space. The dimension of a vector space is the number of basis vectors that are unique.

Gram-Schmidt process

Suppose we have a basis {v1, v2, …, vn} for a vector space. The ordinary basis vectors may be neither orthogonal with each other nor normalized. The Gram-Schmidt process then give us a algorithm for converting the ordinary basis to orthonormal basis {u1, u2, …, un}. The algorithm consists of 2 steps:

- Find an orthogonal basis

- Normalize

Null Space

Null space of a matrix A is a vector space of all column vectors x, such that A x = 0. Since null space is a vector space, we would like to find its basis. The basis can span the null space, it also tells us the dimension of the null space. The reduced row echelon form of matrix A will help us.

rref(A) x = 0 is the same to A x = 0rref(A) discloses the relationship between component xi and other components, say:

x1 = c12 x2 + c13 x3 + ... + c1n xn

x2 = c21 x1 + c23 x3 + ... + c2n xn

...

xn = cn1 x1 + cn2 x2 + ... + cn n-1 xn-1Using the equations above and factoring out xi, we could get the basis which consists of cij. The variable xi associated with pivot columns are called basic variables, others are called free variables.

One can use null space to determine the general solution (not a unique solution) of an under-determined system of equations A x = b, where there are fewer equations than unknowns, i.e. number of rows of matrix A < number of columns of the matrix A.

- Let u be a general vector of null space of matrix A. The u will be a linear combination of basis vectors of null space of A.

- Let v be any vector that solves the equation A x = b.

The general solution to the A x = b will be sum of u and v, i.e. x = u + v.

A x = A (u + v) = A u + A v = 0 + b = bBecause null space could have a large dimension, then the solution could have a big range of possible solutions.

Column Space

Column space is the vector space that is spanned by the columns of a matrix. Multiplication of a matrix by a column vector is just the linear combination of the column of the matrix. When we write A x = b, and assume there is a solution, then b (i.e. A x) has to be in the column space of the matrix A. We want to find the basis of column space Col(A) and its dimension.

The process of row reduction (reduced row echelon form) does not change the linear dependence relationship between the columns. Column space is NOT spanned by the pivot columns of rref(A), but by the corresponding columns in the original matrix A. So the overall procedure is:

- Find rref(A).

- Find the columns in original matrix corresponding the pivot columns in rref(A). This is the basis of Col(A).

- The dimension of Col(A) is equal to the number pivot columns of rref(A).

Row Space

The vector space spanned by the rows of a matrix is called row space, but another way of looking that is that is the column space of the transpose of the matrix.

Row(A) = Col(AT)Left Null Space

Left null space of a matrix A is a vector space of all column vectors x, such that xT A = 0. It is just another way of viewing the whole equation transposed. So it is the vector x such that AT x = 0.

LeftNull(A) = Null(AT)Relationship between fundamental subspaces

For a matrix A of size m by n:

| Subspace | Subspace of all … matrices | Dimension |

| Null(A) | n by 1 | # of non-pivot columns of rref(A) |

| Col(A) | m by 1 | # of pivot columns of rref(A) |

| Row(A) | n by 1 | # of pivot columns of rref(A) |

| LeftNull(A) | m by 1 |

- Null(A) and Row(A) are orthogonal complements. Because A Null(A) = 0, rows of A are orthogonal to the null space Null(A). So the vectors in row space are orthogonal to the vectors in null space.

- Rank of the matrix is the linearly independent columns that a matrix has., it also equals to the linearly independent rows that a matrix has.

Rank(A) = the dimension of Row(A) = the dimension of Col(A)Orthogonal Projections

We have a vector v in a big vector space V, we want to project it down into a subspace W of the original vector space V. The reason we want to do this is the new projected vector vproj_W is closest to v in W, which is useful to solve some problem.

Least Squares Problem

Usually in statistics, you find the distance between each of the points and the line you may draw, and you square the distance and sum them up, and then you try to find the line that minimize the sum.

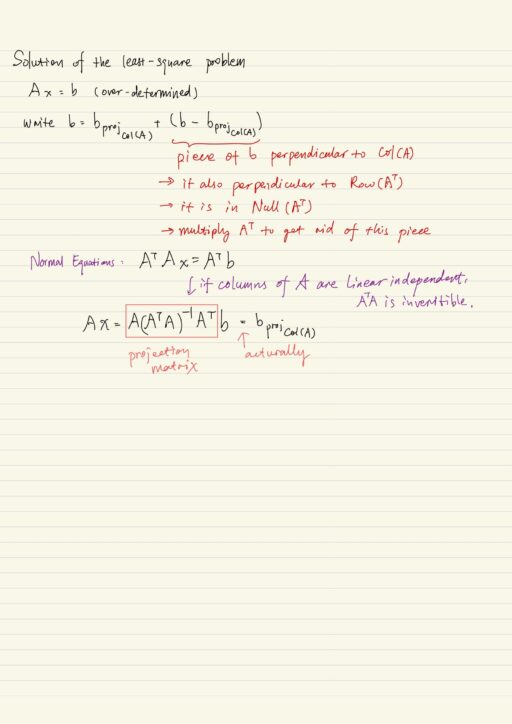

But from the perspective of matrices, we construct the least squares problem in the A x = b form. There are many more equations than there are unknowns, so it is over-determined. If A x = b is over determined, that means b is not in the column space of a. (Recall that column space is the vector space that is spanned by the columns of a matrix.) So there is no solution for the unknown x in the equation A x = b.

But to find the best solution for this equation, we can project b onto the column space of A. So, we will solve

A x = bproj_Col(A)Normal equation is the key to solve it:

AT A x = AT b

My Certificate

For more on Vector Space & Fundamental Subspaces, please refer to the wonderful course here https://www.coursera.org/learn/matrix-algebra-engineers

Related Quick Recap

I am Kesler Zhu, thank you for visiting my website. Check out more course reviews at https://KZHU.ai