Reward

That all of what we mean by goals and purposes can be well thought of as maximization of the expected value of the cumulative sum of a received scalar signal (reward).

R. Sutton

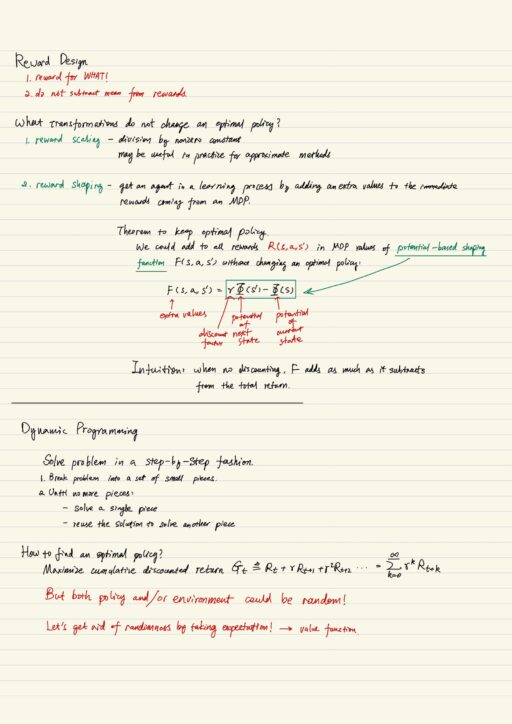

This signal is ‘reward’, and sum of the signals is ‘return’. Each immediate reward depends on the agent action and environment reaction to this action. How do we deal with infinite return? One of the most common approaches is Reward Discounting. The reason of discounting is partially mathematical convenience and partially inspiration from human behavior. It makes infinite sums finite and allows us to express the return in a recurrent fashion. Reward only for ‘what’, never for ‘how’. And do not subtract anything from rewards. Reward scaling (division by nonzero constant) may be useful in practice for approximation methods.

Optimal policy and Bellman Equations

First of all, what is Dynamic Programming? It is a method solving complex problem in a step-by-step fashion. It breaks the problem into a set of smaller pieces, and solve the next piece using all the results of already solved pieces.

How to find optimal policy? Maximize cumulative discounted return! The return may be random. How to handle the randomness? One of the most innovative approaches is by the means of taking an expectation. This approach corresponds to the notion of (state-)value function and action-value function. The decomposition of these functions is important that it owns a name: “Bellman expectation equations”.

How to compare policy? One natural measure is how much of reward could the policy get. We say that policy π is greater than or equal to π’, if and only if, the value function of π is greater or equal to the value function of π’, for each and every state. This naturally yields the definition of the optimal policy π*, which is greater than or equal to any other possible policy. Additionally, there is a beautiful theorem stating that, in any finite Markov Decision Process, there exists at least one deterministic policy.

The best policy defines the optimal (state-)value function and optimal action-value function. The recursive computation from of these 2 functions have their own name known as “Bellman optimality equations”.

All of the things described above is basically the Dynamic Programming in action.

Policy evaluation & improvement

There are 2 approaches of finding optimal policy: model-based and value-based.

- Model-based: known model of the world, and we know all probabilities of rewards and next state, given current state and action.

- Value-based: estimate a value and extract a policy from that value.

Policy evaluation predicts value function for a particular policy. For each state we associate value function of the state under a policy. Bellman expectation equation is useful here, which is basically a system of linear equations (where number of unknowns = number of equations = number of states). It is sufficient for us to obtain an approximate solution.

Once we know what is the value for a state, we could improve the policy by acting greedily with regard to the value.It is to say: Improve policy by acting greedily with respect to the value function.

Generalized policy iteration

Generalized policy iteration means interleaving policy evaluation and policy improvement, and eventually make the policy the optimal one. In this procedure, policy evaluation and policy improvement are both competing and cooperating. Evaluation makes it no longer greedy w.r.t. its value function, meanwhile improvement makes it greedy (we change value function). It eventually drives a policy to be an optimal one.

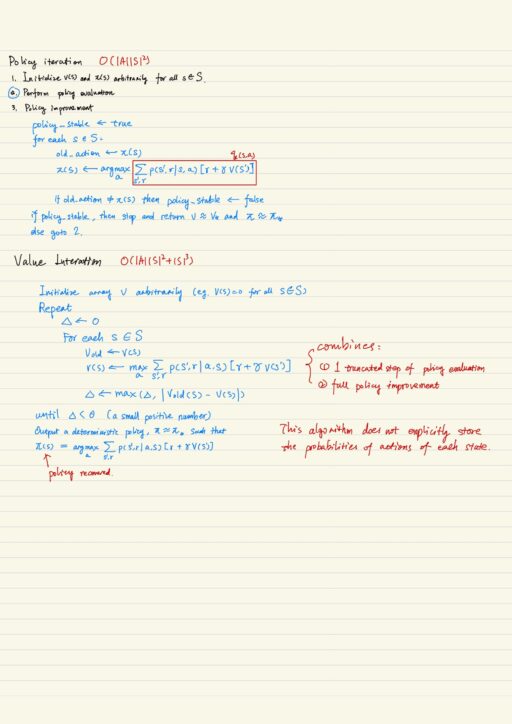

- Policy Iteration: Evaluate policy until convergence (with some tolerance), and then improve policy.

- Value Iteration: Evaluate policy only with single iteration, and then improve policy.

My Certificate

For more on Dynamic Programming in RL, please refer to the wonderful course here

https://www.coursera.org/learn/practical-rl

Related Quick Recap

I am Kesler Zhu, thank you for visiting. Check out all of my course reviews at https://KZHU.ai