Practical Reinforcement Learning (Higher School of Economics): I am very proud that I survived and completed this thorny but exciting journey with Honors. I spent nearly 1 month on this very challenging course, which covers an enormous amount of knowledge. The lecture videos are all well prepared; they not only help me consolidate my original understanding, but also…

Tag: Practical Reinforcement Learning

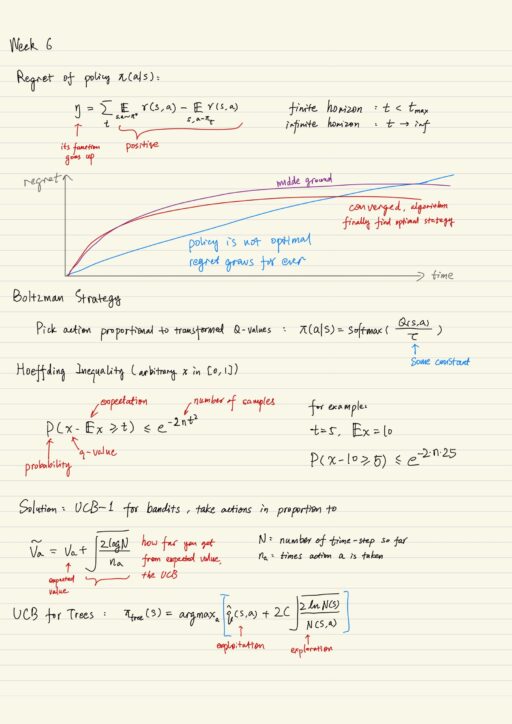

Exploration and Planning in Reinforcement Learning

Exploration is needed to find unknown actions that lead to very large rewards. Most reinforcement learning algorithms share one problem: they learn by trying different actions and seeing which works better. We can use a few ad hoc heuristics (e.g. epsilon-greedy exploration) to mitigate the problem and speed up the learning process. Multi-armed bandits…
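
As a rough illustration of the epsilon-greedy heuristic mentioned in this excerpt, here is a minimal sketch. The function name `epsilon_greedy` and its parameters are hypothetical and not taken from the post; it assumes the action-value estimates are available as a plain list.

```python
import random

def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the greedy one.

    q_values: list of estimated action values, one per action (assumed input).
    epsilon:  exploration probability in [0, 1].
    """
    if rng.random() < epsilon:
        # Explore: choose any action uniformly at random.
        return rng.randrange(len(q_values))
    # Exploit: choose the action with the highest estimated value.
    return max(range(len(q_values)), key=lambda a: q_values[a])
```

With `epsilon=0` the agent never explores, and with `epsilon=1` it ignores its estimates entirely; practical settings sit in between and often decay over time.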

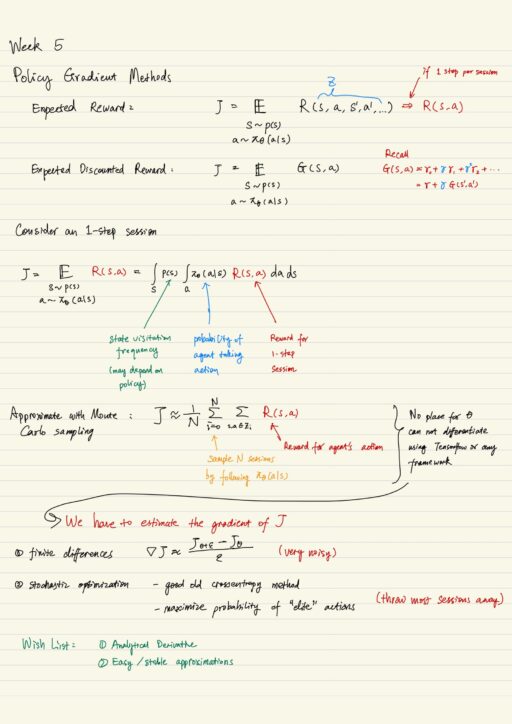

Reinforcement Learning: Policy Gradient Methods

The problems of value-based methods: The idea behind value-based reinforcement learning (say, Q-learning) is to find the optimal action in a state based on how much discounted reward you will get by following a policy. The first problem is that value-based methods do not explicitly learn “what to do”; instead, they learn “what kind of…

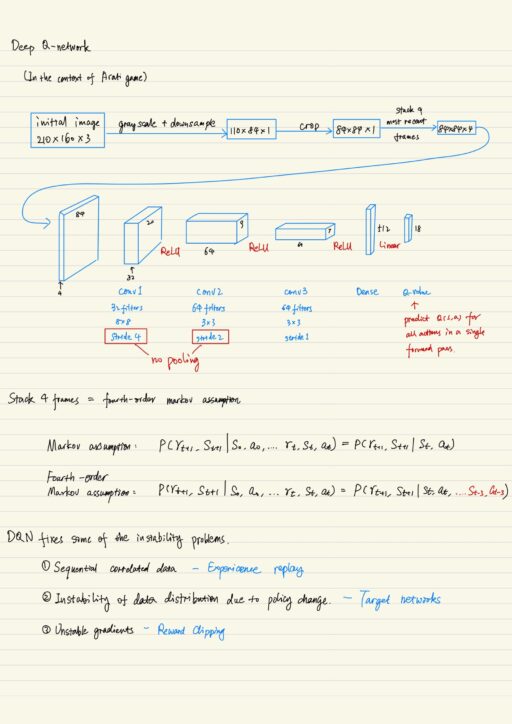

Deep Q-Network in Reinforcement Learning

Deep Q-Network (DQN) was the first successful application of learning both directly from raw visual inputs, as humans do, and in a wide variety of environments. It uses a deep convolutional network with no hand-designed features. DQN is actually no more than standard Q-learning bundled with stabilization techniques and epsilon-greedy exploration. In any application, the first thing you…

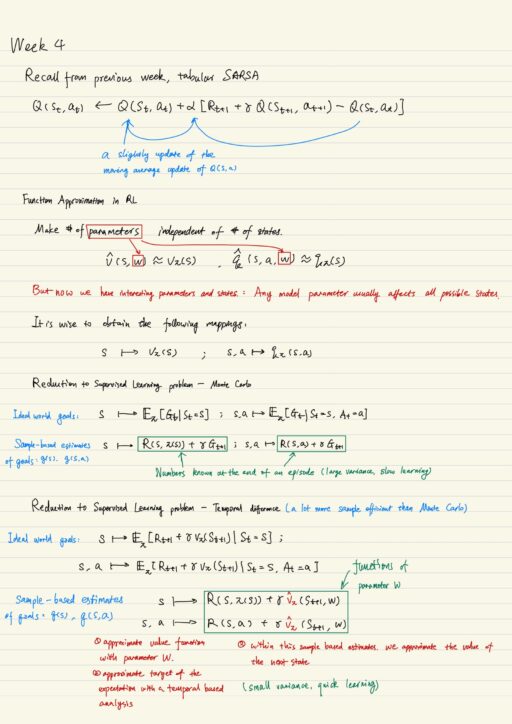

Supervised Learning in Reinforcement Learning

Reduction to a supervised learning problem: In the tabular method, each Q(s, a) can be seen as a parameter. There are more parameters than states, because each state has as many parameters as it has possible actions. There are also situations where states include continuous components. It means we need a…
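
To make the parameter count concrete, here is a small sketch of a tabular Q-function stored as a nested list. The helper `make_q_table` and the sizes used are illustrative assumptions, and it assumes every state has the same number of actions.

```python
def make_q_table(n_states, n_actions, initial_value=0.0):
    """Build a table with one entry Q(s, a) per state-action pair."""
    return [[initial_value] * n_actions for _ in range(n_states)]

def count_parameters(q_table):
    """Each table entry is one learnable parameter."""
    return sum(len(row) for row in q_table)

# Hypothetical sizes: 5 states with 3 actions each.
q_table = make_q_table(n_states=5, n_actions=3)
n_parameters = count_parameters(q_table)  # 5 * 3 entries
```

The table grows with the product of states and actions, which is why it stops being practical once states have continuous components.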

Model-free Reinforcement Learning

Value Iteration in the real world: In the real world, we don’t have the state transition probability distribution or the reward function. You may try sampling them, but you will never know their exact probabilities. As a result, you cannot compute the expectation of the action values. We want a new algorithm that would…
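
One well-known way to learn from sampled transitions instead of known probabilities is the tabular Q-learning update, sketched below. Whether this is the algorithm the post goes on to introduce is not visible in the excerpt; the function name, the nested-list table layout, and the default `alpha` and `gamma` values are all assumptions.

```python
def q_learning_update(q, state, action, reward, next_state,
                      alpha=0.1, gamma=0.99):
    """Apply one Q-learning update from a single sampled transition.

    q is a nested list indexed as q[state][action] (assumed layout).
    No transition probabilities or reward function are required:
    only the observed (state, action, reward, next_state) sample.
    """
    # Bootstrapped target: observed reward plus discounted best next value.
    td_target = reward + gamma * max(q[next_state])
    # Move the current estimate a fraction alpha toward the target.
    q[state][action] += alpha * (td_target - q[state][action])
    return q[state][action]
```

Averaging many such sampled updates stands in for the expectation that cannot be computed exactly without the model.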

Dynamic Programming in RL

Reward: “That all of what we mean by goals and purposes can be well thought of as maximization of the expected value of the cumulative sum of a received scalar signal (reward).” (R. Sutton) This signal is the ‘reward’, and the sum of the signals is the ‘return’. Each immediate reward depends on the agent action and environment…
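
The relationship between reward and return can be sketched in a few lines. The function name `discounted_return` and the `gamma` parameter are assumptions added for illustration; with `gamma=1.0` the result is the plain sum of rewards described in the excerpt.

```python
def discounted_return(rewards, gamma=1.0):
    """Sum a reward sequence, weighting the reward at step t by gamma**t."""
    total = 0.0
    # Work backwards so each step is: reward + gamma * (return from next step).
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total
```

For example, rewards of 1, 2 and 3 give a return of 6 when undiscounted, while a discount factor below 1 shrinks the contribution of later rewards.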