The problems of value-based methods

The idea behind value-based reinforcement learning (say, Q-learning) is to find an optimal action, in a state, based on how much discounted reward you will get, by following a policy. The first problem here is value-based methods do not explicit learn “what to do”, instead it learns “what kind of value” or “what kind of function” you will get when you do so. They try to learn value of entire life from this moment to the end, by adding up all possible rewards multiplied with discount ɣ. This is usually impractical and also hard for neural network to approximate.

Another problem of value-based methods is that they depend on the minimization of the loss function (say, mean squared error). But a policy with less mean squared error does not usually mean a “good” policy. A good policy should produce the same action as optimal policy when argmax is used, even though the current action-value is different than the true action-value.

But there is another family of methods trying to avoid learning state value (V) or action value (Q) or any value-based functions. A good example is cross-entropy. Although it has a few drawbacks, but it has neat efficiency in it, it learns complicated environment really simple. It does not try to approximate Q-functions, instead it simply tries to learn what to do, i.e. the probability π(a | s).

Kinds of policy

We need to learn optimal policy, we start from some randomly initialized policy and then we need some algorithm to improve it. There are 2 general ways that the algorithm takes actions:

- Deterministic policy a = π(a | s) – take one state and predict one action or value of action if it is continuous

- Stochastic policy a 〜 π(a | s) – learn a probability distribution for each action

Stochastic policy kinda take care exploration for you. In the case that action space is continuous, we can try some distribution: categorical, normal, mix normal, etc.

Value-based vs Policy-based

| Value-based | 1. Learn value function V(s) or Q(s, a) 2. Infer policy π(a | s) = number of( a = argmaxaQ(s, a) ) |

| Policy-based | 1. Explicitly learn policy π(a | s) or π(s) -> a 2. Implicitly maximize reward over policy |

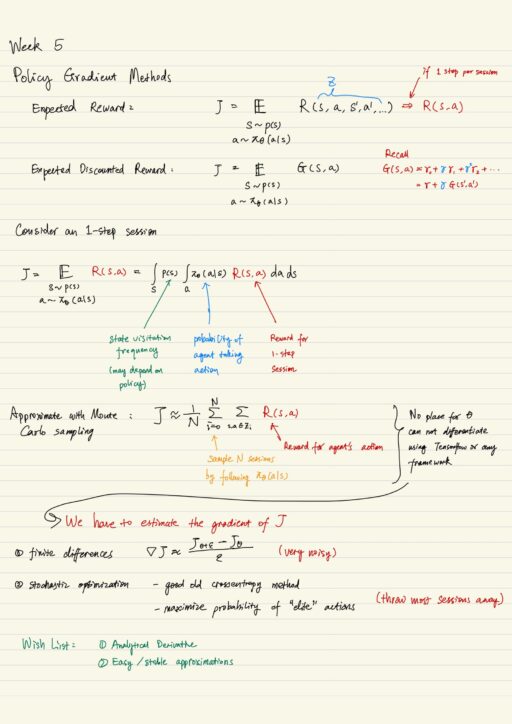

Policy Gradient Methods

Compared with other fancy tricks, we better off just write down what we want to optimize, then follow the derivative via gradient descent or back propagation in neural networks. Consider an 1-step session, we could approximate the total reward J with Monte Carlo sampling. Now the problem is TensorFlow can only compute derivative with respect to something that is in the formula. But in Monte Carlo sampling equation there is no place for θ (the parameters of policy π). Monte Carlo sampling depends on θ only in summation, but it does not remember θ.

We could fix this problem by estimating the gradient of J. There are a few methods, for example: finite differences and stochastic optimization. But the drawback is also obvious:

- They need lots of samples, the number grows exponentially with number of parameters

- Large variance in gradient

- Absence of math soundness

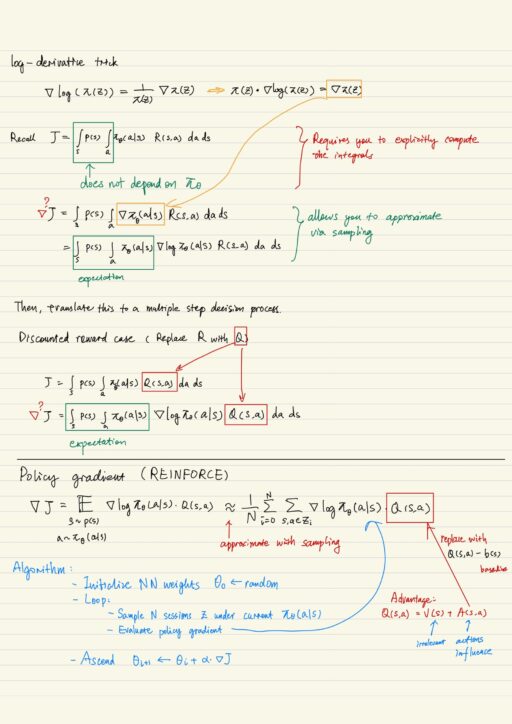

Remember we want to compute derivative of expected reward ∇J. Another method is to use log-derivative trick to derive analytical inference of total reward J. This idea leads to an algorithm called REINFORCE.

REINFORCE

REINFORCE is an on-policy algorithm. About any practical problem with any complexity will suffer a universal problem, which is when you multiply Q values with policy gradients, the easy states (agent does do any thing, but gets lots of rewards) will get up-weighted; on the other side, the difficult states (agent does a lot but merely gets a little rewards) will get down-weighted.

Any improvement in difficult states should be more important than that in easy status, but REINFORCE algorithm kinda stays the opposite. On the contrary you want to encourage your agent NOT to do things just good by themselves (simple tasks with lots of rewards), and encourage agent do good things in comparison with how the agent usually performs.

Any improvement shall be capitalized. So the idea is to reward on improvements (advantages) instead of Q-values only. Advantage is how good your agent performs compared to what it usually does. This can be expressed as baseline function in the equation.

Advantage Actor-Critic

The idea behind actor-critic is that it learns pretty much all it can learn currently, which means it tries approximate value function V and also policy function π. By combining value function and policy function, it can obtain better performance. It actually learns value function V to improve performance of policy training.

We have to train a neural network with 2 outputs: policy and value function. Policy is a layer that has as many units as you have actions, and use softmax to return a valid probability distribution. The value function is just a single unit. Then you have to perform to 2 kinds of updates:

- Update the policy

- suppose value function is good enough

- provide better estimate for the policy gradient

- ascend the policy gradient over neural network parameters

- Refine your value function

- similar to what you have done in DQN

- DQN enhancement does not bring much reward

You simply learn these 2 functions interchangeably:

- Compute the gradient of J (actor, accumulated reward), ascend it.

- Compute the gradient of L (critic, mean squared error of value functions), descend it.

Useful Tips

You can assume the value function based loss (the temporal difference loss) is less important. Actor still learns even if critic is random.

In the case of some actions are completely abandoned, you are no longer receiving samples consisting of these actions in some particular states. If you are sure these actions are useless, it is OK; otherwise you should not completely give up an action. We can prevent this by introducing regularization to policy loss function. You want encourage your agent to increase the entropy in the policy. This basically resulting your agent preferring not to give a zero probability to anything.

If you have parallel sessions, you can parallelize the sampling procedure.

Using policy gradient in deep learning neural network, you have to be careful with logarithm of probability. You may end up with a probability which is almost zero, and logarithm of zero is almost negative infinity. You can mitigate this with logsoftmax.

Value-based vs Policy-based methods

| Value-based methods | Policy-bases methods |

| Q-learning, SARSA, value-iteration | REINFORCE, advantage actor-critic, cross-entropy |

| solve harder problem | solve easier problem |

| explicit exploration | innate exploration and stochasticity |

| evaluate states and actions | easier for continuous action space |

| easier to train off-policy | compatible with supervised learning |

Asynchronous Advantage Actor-Critic

A3C is on-policy, you have to train it on the actions taken under current policy, the old experience in experience replay dose not make sense and is usually restricted. Since we can not use experience replay, we need a new method to mitigate the problem of sessions that are not independent and identically distributed (IID). The new method is parallel session, based on the assumption that if there is enough of them, the sessions will be IID.

You have to spawn N clones of agents, which all use the same set of weights, but play independent in their own environment. They take actions by sampling from the policy independently. Finally if you play long enough, all agents are likely to be in different trajectories and states. These agents and their environments are usually assigned to different processes, since they usually do not interact often.

Another famous usage is A3C with LSTM (Long short-term memory) allowing agent to use some kind of recurrent memory. Several sequential transitions [s, a, r, s’, a’, …, s_k] are minimum amount of information required to perform an iteration of training for A3C with LSTM.

Supervised Pre-training and More

Reinforcement learning usually takes long to find optimal policy if completely from scratch. You can try to initialize the agent with some prior knowledge which can be yielded by supervised learning. If you have a neural network, you can pre-train it on existing data, then you can follow up by using policy gradient. The idea is that the methods for both supervised learning and policy gradient require the similar things.

You basically initialize your policy at random, then you train the policy to maximize the probability of either human sessions or whatever heuristics (not perfect yet, but better than random before). Next you train model in policy-gradient mode to get even better reward.

There is more than 1 way you can define policy gradient:

- Trust Region Policy optimization (policy gradient on steroids)

- Deterministic policy gradient (allows you to do off-policy training for policy gradient methods)

- And much many more

My Certificate

For more on the essentials of Reinforcement Learning: Policy Gradient Methods please refer to the wonderful course here https://www.coursera.org/learn/practical-rl

Related Quick Recap

I am Kesler Zhu, thank you for visiting my website. Checkout more course reviews at https://KZHU.ai