GCP uses a software-defined network that is built on top of a global fiber infrastructure, which makes it one of the world’s largest and fastest networks. Thinking about resources as services, rather than as hardware, will help you understand the options that are available and their behavior.

Google Cloud is organized into regions and zones, for example:

- United Kingdom
  - Multi-region (network)
    - Region (subnetwork)
      - Zone

A region is a specific geographical location where you can run your resources. There are usually a few zones in a region. A zone is the deployment area for resources, and all the zones within a region are connected by a fast network. Think of a zone as a single failure domain within a region. A few Google Cloud services support placing resources in what is called a multi-region. The PoPs (Points of Presence) are where Google’s network is connected to the rest of the Internet.

Virtual Private Cloud (VPC)

VPC is Google’s managed networking functionality for your cloud platform resources. Its fundamental components include projects, networks, subnetworks, IP addresses, routes, and firewall rules.

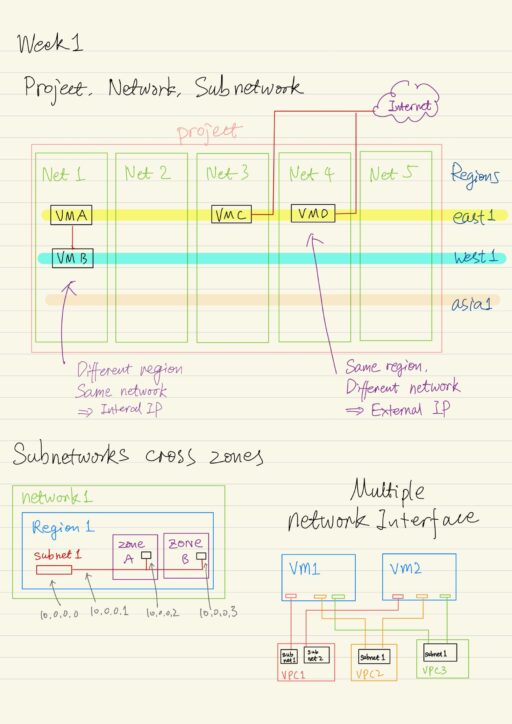

Projects, Networks, and Subnetworks

A project is a key organizer of infrastructure resources in GCP. It associates objects and services with billing, and it can contain networks (up to 5), which can be shared or peered. Every project is provided with a default VPC network with predefined subnets and firewall rules.

A network does not have IP ranges of its own; it is simply a construct of all of the individual IP addresses and services within that network. Networks are global, spanning all available regions across the world, so a single network can literally exist anywhere in the world. Inside a network, you can segregate your resources with regional subnetworks. Each subnetwork belongs to one region and is allocated a CIDR block that does not overlap with the others.

There are 3 different types of networks:

- Default
  - Every project
  - One subnetwork per region
  - Default firewall rules
- Auto
  - One subnetwork per region
  - Regional IP allocation
  - Fixed /20 subnetwork per region, expandable up to /16
  - All subnetworks fit within the 10.128.0.0/9 CIDR block
- Custom
  - Regional IP allocation
  - Does not automatically create subnetworks
  - Full control over subnetworks and IP ranges

You can convert an auto mode network to a custom mode network to take advantage of the control that custom mode networks provide. However, this conversion is one way.

Because VM instances within a VPC network can communicate privately on a global scale, a single VPN can securely connect your on-premises network to your GCP network.

Subnetworks work on a regional scale, so subnetworks can cross zones. A subnetwork is simply an IP address range, and you can use IP addresses within that range:

- x.x.x.0 and x.x.x.1 are reserved for the subnet and the subnet gateway, respectively.

- x.x.x.2 and onward are assigned to virtual machines, etc.

- The second-to-last and last addresses are also reserved, the last one as the broadcast address.
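To make the reserved addresses concrete, here is a small sketch using Python's standard `ipaddress` module. The helper names `reserved_addresses` and `usable_count` are made up for illustration, and the example range is simply an auto mode style /20.

```python
import ipaddress


def reserved_addresses(cidr: str) -> dict:
    """Return the four addresses reserved in a subnet's primary IPv4 range."""
    net = ipaddress.ip_network(cidr)
    return {
        "network": net[0],          # x.x.x.0, the subnet itself
        "gateway": net[1],          # x.x.x.1, the subnet gateway
        "second_to_last": net[-2],  # reserved
        "broadcast": net[-1],       # last address, broadcast
    }


def usable_count(cidr: str) -> int:
    """Addresses left for VMs after removing the four reserved ones."""
    return ipaddress.ip_network(cidr).num_addresses - 4


subnet = "10.128.0.0/20"
for role, addr in reserved_addresses(subnet).items():
    print(f"{role:>15}: {addr}")
print("usable addresses:", usable_count(subnet))
```

For a /20 this leaves 4092 of the 4096 addresses for instances.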

A single firewall rule can be applied to many virtual machines in the same subnetwork, even though they are in different zones.

Google Cloud VPC lets you increase the IP space of any subnet without any shutdown or downtime, subject to the following constraints:

- New subnet must not overlap with other subnets in the same VPC network in any region.

- New subnet must stay inside the RFC 1918 address space.

- New subnet must be larger than the original. (Prefix length must be smaller)

- Auto mode subnets can be expanded from /20 to /16.

- Avoid creating large subnets. Overly large subnets are more likely to cause CIDR collisions when using multiple network interfaces and VPC network peering, or when using a VPN or other connection to an on-premises network.
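The expansion rules can be checked mechanically. The following is only a sketch: `can_expand` is a hypothetical helper (not a Google Cloud API), and it additionally assumes that the expanded range has to contain the original one.

```python
import ipaddress
from typing import Iterable

# The three RFC 1918 private address blocks.
RFC1918 = [
    ipaddress.ip_network(block)
    for block in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


def can_expand(current: str, proposed: str, other_subnets: Iterable[str]) -> bool:
    """Check a proposed subnet expansion against the rules listed above."""
    cur = ipaddress.ip_network(current)
    new = ipaddress.ip_network(proposed)
    # Must be larger (smaller prefix length) and, by assumption, contain the original.
    if not (new.prefixlen < cur.prefixlen and cur.subnet_of(new)):
        return False
    # Must stay inside the RFC 1918 address space.
    if not any(new.subnet_of(block) for block in RFC1918):
        return False
    # Must not overlap any other subnet in the same VPC network.
    return not any(new.overlaps(ipaddress.ip_network(o)) for o in other_subnets)


others = ["10.130.0.0/20"]
print(can_expand("10.128.0.0/20", "10.128.0.0/16", others))  # fits next to 10.130.0.0/20
print(can_expand("10.128.0.0/20", "10.128.0.0/14", others))  # would swallow 10.130.0.0/20
```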

You can also migrate a virtual machine from one network to another. When the VM is connected to more than one network using multiple network interfaces, this process updates one of the interfaces, and leaves the rest in place.

- In the same project
  - legacy network ⟹ VPC network
  - one VPC network ⟹ another VPC network
- In the same network
  - one subnet ⟹ another subnet
- A service project network ⟹ shared network of a Shared VPC host project.

In all cases, the VM stays in the region and zone where it was before; only the attached network changes.

IP Addresses

In Google Cloud, each virtual machine can have two IP addresses assigned:

- Internal IP
  - Allocated from the subnet range by DHCP
  - DHCP lease is renewed every 24 hours
  - VM name + IP is registered with network-scoped DNS
- External IP (optional)
  - Assigned from a pool (ephemeral) or reserved (static).
  - The VM does not know its external IP; it is mapped to the internal IP. Running ifconfig within a virtual machine returns only the internal IP address.

Each instance has a host name (the same as the instance name) that can be resolved to an internal IP address. There’s also an internal fully qualified domain name (FQDN) for an instance, for example: hostname.c.projectid.internal.

Internal IP Addresses

If you delete and recreate an instance, the internal IP address can change. However, the DNS name always points to a specific instance, no matter what its internal IP address is. Each instance has a metadata server, provided as part of Compute Engine, that also acts as a DNS resolver for that instance. The metadata server handles all DNS queries for local network resources and routes all other queries to Google’s public DNS servers for public name resolution.

Alias IP ranges let you assign a range of internal IP addresses as aliases to a virtual machine’s network interface. This is useful if you have multiple services running on a VM and you want to assign a different IP address to each service. In essence, you can configure multiple IP addresses representing containers or applications hosted in a VM without having to define a separate network interface.

External IP Addresses

Instances with external IP addresses can allow connections from hosts outside of the project. Public DNS records pointing to instances are not published automatically (admins can publish these using existing DNS servers). DNS zones can be hosted using Cloud DNS.

Cloud DNS is a scalable, reliable, and managed authoritative Domain Name System (DNS) service running on the same infrastructure as Google. Cloud DNS translates requests for domain names, like google.com, into IP addresses.

Routes

A route is a mapping of an IP range to a destination; it maps traffic to destination networks. A route is created when a network is created: every network has a default route that directs packets to destinations that are outside the network. A route is also created when a subnet is created, enabling VM instances on the same network to send traffic directly to each other, even across subnets. In both cases, firewall rules must also allow the packet.

The default network has preconfigured firewall rules that allow all instances in a network to talk with each other. You must create rules yourself if you manually create networks.

Each route in the routes collection may apply to one or more instances. Compute Engine then uses the routes collection to create individual read-only routing tables for each instance. A massively scalable virtual router is at the core of each network. Every virtual machine instance in the network is directly connected to this router, and all packets leaving a virtual machine are first handled in the router before they are forwarded to the next hop.
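As a rough mental model of how a routing table picks a next hop, here is a minimal sketch. It assumes that the most specific matching destination range wins and that ties go to the lower priority number; the `Route` class, route names, and next-hop labels are all illustrative rather than real API objects.

```python
import ipaddress
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Route:
    name: str
    dest_range: str       # IP range this route covers
    next_hop: str         # where matching packets are sent
    priority: int = 1000  # lower number = preferred on ties (assumption)


def select_route(routes: Iterable[Route], dest_ip: str) -> Optional[Route]:
    """Pick the route for dest_ip: longest prefix first, then lowest priority."""
    ip = ipaddress.ip_address(dest_ip)
    candidates = [r for r in routes if ip in ipaddress.ip_network(r.dest_range)]
    if not candidates:
        return None
    return max(
        candidates,
        key=lambda r: (ipaddress.ip_network(r.dest_range).prefixlen, -r.priority),
    )


routes = [
    Route("default-internet", "0.0.0.0/0", "default-internet-gateway"),
    Route("subnet-us", "10.128.0.0/20", "vpc-network"),
    Route("subnet-eu", "10.132.0.0/20", "vpc-network"),
]
print(select_route(routes, "10.132.0.7").name)  # subnet route beats the default route
print(select_route(routes, "8.8.8.8").name)     # only the default route matches
```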

Firewall Rules

GCP firewall rules protect your virtual machine instances from unapproved connections, both inbound and outbound, known as ingress and egress respectively. Every VPC network functions as a distributed firewall. Firewall rules are applied to the network as a whole; however, connections are allowed or denied at the instance level. The firewall exists not only between your instances and other networks, but also between individual instances within the same network. Even if all firewall rules in a network are deleted, there are still an implied “deny all ingress” rule and an implied “allow all egress” rule.

| Parameters | Details |
| --- | --- |
| direction | Inbound connections are matched against ingress rules only. Outbound connections are matched against egress rules only. |
| source or destination | Source (for ingress rules): IP addresses, source tags, or service accounts. Destination (for egress rules): one or more ranges of IP addresses. |
| protocol and port | Any rule can be restricted to apply to specific protocols only, or specific combinations of protocols and ports only. |
| action | To allow or deny. |
| priority | Governs the order in which rules are evaluated; the first matching rule applies. |
| assignment | All rules are assigned to all instances by default, but you can assign certain rules to certain instances only (using target tags or service accounts). |
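The evaluation order in the table can be sketched in a few lines of Python. This is a simplified model, not the real implementation: the `Rule` class is hypothetical, only IP ranges are matched (no tags or service accounts), and ties between rules of equal priority are not modeled.

```python
import ipaddress
from dataclasses import dataclass
from typing import Iterable, Optional, Sequence


@dataclass(frozen=True)
class Rule:
    name: str
    direction: str                  # "ingress" or "egress"
    action: str                     # "allow" or "deny"
    priority: int                   # lower number is evaluated first
    ranges: Sequence[str]           # source ranges (ingress) or destination ranges (egress)
    protocol: Optional[str] = None  # None matches any protocol
    ports: Sequence[int] = ()       # empty matches any port


def evaluate(rules: Iterable[Rule], direction: str, peer_ip: str,
             protocol: str, port: int) -> str:
    """Return the action of the first matching rule, else the implied rule."""
    ip = ipaddress.ip_address(peer_ip)
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.direction != direction:
            continue
        if rule.protocol is not None and rule.protocol != protocol:
            continue
        if rule.ports and port not in rule.ports:
            continue
        if any(ip in ipaddress.ip_network(r) for r in rule.ranges):
            return rule.action
    # Implied rules: deny all ingress, allow all egress.
    return "deny" if direction == "ingress" else "allow"


rules = [
    Rule("allow-office-ssh", "ingress", "allow", 1000, ["203.0.113.0/24"], "tcp", [22]),
    Rule("deny-all-ssh", "ingress", "deny", 2000, ["0.0.0.0/0"], "tcp", [22]),
]
print(evaluate(rules, "ingress", "203.0.113.9", "tcp", 22))   # matched by the allow rule
print(evaluate(rules, "ingress", "198.51.100.1", "tcp", 22))  # falls to the deny rule
print(evaluate(rules, "egress", "8.8.8.8", "udp", 53))        # implied allow egress
```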

Hierarchical Firewall Policies

Hierarchical firewall policies let you create and enforce a consistent firewall policy across your organization, folders, projects, and VMs. You can assign hierarchical firewall policies to the organization as a whole or to individual folders. These policies contain rules that can explicitly deny or allow connections, as do Virtual Private Cloud (VPC) firewall rules.

In addition, hierarchical firewall policy rules can delegate evaluation to lower-level policies or VPC network firewall rules with a goto_next action. Lower-level rules can’t override a rule from a higher place in the resource hierarchy.

Multiple Network Interfaces

VPC networks are isolated by default. VM instances within a VPC network can communicate among themselves via internal IP addresses as long as firewall rules permit. Internal IP address communication is NOT allowed between networks unless you set up a mechanism such as VPC peering or VPN. Usually, communication across networks uses external IP addresses.

Every VM instance in a VPC network has a default network interface. You can create additional network interfaces attached to VMs through network interface controllers or NICs. Multiple network interfaces enable you to create configurations in which an instance connects directly to several VPC networks.

When an internal DNS query is made with the instance host name, it resolves to the primary interface or nic0 of that instance. If the nic0 interface of the instance belongs to a VPC network different from the VPC network of the instances using the internal DNS query, the query will fail.

Controlling Access to VPC

Cloud Identity and Access Management (IAM) and firewall rules are some of the ways to control access to your VPC network.

Identity & Access Management

IAM is a way of identifying “who” can do “what” on “which” resource. IAM focuses on “who”.

| Who | A person, a group, or an application. |

| What | Specific privileges, or actions. |

| Which | Any Google Cloud service. |

IAM objects include organization, folders, projects, resources, roles, and members. They are organized in the Cloud IAM resource hierarchy, and Cloud IAM allows you to set policies at all of these levels, where a policy contains a set of roles and members. Policies are inherited downward: if a parent policy is less restrictive, it overrides a more restrictive policy set on a child. It is a best practice to follow the principle of least privilege.

- Organization (your company)
  - Folders (your department)
    - Projects (a trust boundary)
      - Resources (inherit policies from parent)
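One way to picture policy inheritance is as a union of role bindings collected while walking up the hierarchy. The sketch below uses made-up resource names and member emails; `PARENT`, `POLICIES`, and `effective_bindings` are illustrative, not part of any Google Cloud client library.

```python
from typing import Dict, Optional, Set, Tuple

Binding = Tuple[str, str]  # (member, role)

# Hypothetical hierarchy: child -> parent.
PARENT: Dict[str, Optional[str]] = {
    "vm-1": "project-a",
    "project-a": "folder-dept",
    "folder-dept": "org",
    "org": None,
}

# Policies set directly on each node.
POLICIES: Dict[str, Set[Binding]] = {
    "org": {("alice@example.com", "roles/viewer")},
    "folder-dept": {("alice@example.com", "roles/editor")},
    "project-a": {("bob@example.com", "roles/compute.networkAdmin")},
}


def effective_bindings(resource: str) -> Set[Binding]:
    """Union of the bindings on the resource and on all of its ancestors."""
    bindings: Set[Binding] = set()
    node: Optional[str] = resource
    while node is not None:
        bindings |= POLICIES.get(node, set())
        node = PARENT[node]
    return bindings


for member, role in sorted(effective_bindings("vm-1")):
    print(member, role)
```

Because the result is a union, a grant made higher up cannot be taken away lower down, which is why a less restrictive parent policy wins.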

There are 5 different types of members:

| Google accounts | Any email address that is associated with a Google Account can be an identity. |

| Service accounts | An account that belongs to your application instead of to an individual user. |

| Google Groups | A named collection of Google Accounts and Service Accounts. |

| Google Workspace Domains | A virtual group of all the Google Accounts that have been created in an organization’s Google Workspace (formerly G Suite) account. |

| Cloud Identity Domains | A virtual group of all Google Accounts in an organization. |

There are 3 kinds of roles in Cloud IAM:

- Basic roles (original roles available in Cloud Console)
  - Owner (full administrative access)
  - Editor (modify and delete access)
  - Viewer (read-only access)
  - Billing administrator (manage billing, add or remove admins)
- Predefined roles (granular access to specific Google Cloud resources)
  - Any meaningful role contains a collection of permissions, which are classes and methods in the APIs.
  - Network-related roles:
    - Network Viewer (read-only access to all networking resources)
    - Network Admin (create, modify, delete networking resources, except for firewall rules and SSL certificates)
    - Security Admin (create, modify, delete firewall rules and SSL certificates)
- Custom roles
  - You can create a custom role with one or more permissions, and then grant that custom role to users.

Organization Policy

The Organization Policy service focuses on “what”: it gives you centralized and programmatic control over your organization’s cloud resources. An organization policy is a configuration of constraints with the desired restrictions for one or more Google Cloud services.

A constraint defines what behaviors are controlled. It is then applied to a resource hierarchy node as an organization policy, which implements the rule defined in the constraint. The Google Cloud service mapped to that constraint and associated with that resource hierarchy node will then enforce the restrictions configured within the organization policy.

Sharing Networks Across Projects

Many organizations commonly deploy multiple isolated projects with multiple VPC networks and subnets. There are two configurations for sharing VPC networks across GCP projects:

| Shared VPC | share a network across several projects in your GCP organization |

| VPC network peering | private communication across projects in the same or different organizations |

Shared VPC: A Centralized Approach

Shared VPC allows an organization to connect resources from multiple projects to a common VPC network. This allows the resources to communicate with each other securely and efficiently using internal IPs from that network. Overall, Shared VPC is a centralized approach to multi-project networking because security and network policy are administered in a single designated VPC network.

When you use shared VPC, you designate a project as a host project, and attach one or more other service projects to it. The overall VPC network is called the shared VPC network. Shared VPC makes use of Cloud IAM roles for delegated administration.

| Organization Admin role | 1. The Google Workspace or Cloud Identity super administrators are the first users who can access the organization. 2. Assign the organization admin role to users. 3. Nominate Shared VPC admins by granting them appropriate project creation and deletion roles, and the compute.xpnAdmin role for the organization. |

| Shared VPC Admin role | 1. Enable Shared VPC for the host project. 2. Attach service projects to the host project. 3. Delegate access to some or all of the subnets in the Shared VPC network to service project admins, by granting the compute.networkUser role. 4. The Shared VPC Admin is usually the project owner for a given host project. |

| Service Project Admin role | 1. Have at least the compute.instanceAdmin role for the corresponding service project. 2. Service project admins are usually project owners of their own service projects. 3. Create resources in the Shared VPC. |

An example would look like this:

- The Organization Admin nominates a Shared VPC Admin.

- The Shared VPC Admin configures a project to be a host project with subnet level permissions.

- The Shared VPC Admin attaches service projects to the host project and gives each project owner the networkUser role for the corresponding subnet.

- Each service project owner can create VM instances from their service projects in the shared subnets.

Shared VPC Admins have full control over the resources in the host project. They can optionally delegate the Network Admin and Security Admin roles for the host project.

VPC Peering: A Decentralized Approach

VPC network peering allows private RFC 1918 connectivity across two VPC networks, regardless of whether they belong to the same project or the same organization. For VPC network peering to be established, the admins of both VPC networks have to peer their own network with the other’s network. Only then does the peering session become active and routes get exchanged. This allows the VM instances to communicate privately using their internal IP addresses.

VPC network peering is a decentralized or distributed approach to multi-project networking because each VPC network may remain under the control of separate administrator groups and maintains its own global firewall and routing tables.

VPC peering has advantages over alternatives such as external IP addresses or VPN: it does not incur their network latency, security, and cost drawbacks. VPC network peering works with Compute Engine, Kubernetes Engine, and the App Engine flexible environment. Each side of a peering association is set up independently. Peering becomes active only when the configurations from both sides match.

Routes, firewalls, VPNs, and other traffic management tools are administered and applied separately in each of the VPC networks. A subnet CIDR prefix in one peered VPC network cannot overlap with a subnet CIDR prefix in the other peered network. So, auto mode VPC networks that only have the default subnets cannot peer with each other. Only directly peered networks can communicate; peering is not transitive.
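The non-overlap requirement is easy to verify before attempting to peer. Here is a minimal sketch with Python's `ipaddress` module; `overlapping_subnets` and the example ranges are hypothetical, with the auto mode list standing in for two of the default /20 subnets.

```python
import ipaddress
from itertools import product
from typing import List, Sequence, Tuple


def overlapping_subnets(net_a: Sequence[str], net_b: Sequence[str]) -> List[Tuple[str, str]]:
    """Return every pair of subnet ranges (one per network) that overlap."""
    return [
        (a, b)
        for a, b in product(net_a, net_b)
        if ipaddress.ip_network(a).overlaps(ipaddress.ip_network(b))
    ]


auto_mode = ["10.128.0.0/20", "10.132.0.0/20"]  # same defaults in every auto mode network
custom = ["10.10.0.0/24", "192.168.5.0/24"]

print(overlapping_subnets(auto_mode, auto_mode))  # identical defaults collide
print(overlapping_subnets(auto_mode, custom))     # no collisions, peering is possible
```

Two auto mode networks always produce collisions here, which is the reason given above for why they cannot peer.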

| Consideration | Shared VPC | VPC Peering |
| --- | --- | --- |
| Across organizations | No | Yes |
| Within project | No | Yes |
| Network administration | Centralized | Decentralized |

In Google Cloud, it is possible to set up VPC network peering between two Shared VPC networks.

Cloud Load Balancing

Cloud Load Balancing is a fully distributed, software-defined, managed service. It gives you the ability to:

- distribute load-balanced compute resources in a single region or multiple regions to meet your high-availability requirements,

- put your resources behind a single anycast IP address, and

- scale your resources up or down with intelligent autoscaling.

Google Cloud offers different types of load balancers, which can be divided into 2 categories:

- Global (leverages Google Frontends)
  - HTTP/HTTPS load balancing
  - SSL proxy load balancing
  - TCP proxy load balancing
- Regional
  - Internal TCP/UDP (uses Andromeda)
  - Network TCP/UDP (uses Maglev)
  - Internal HTTP/HTTPS load balancers

Managed instance groups

A managed instance group is a collection of identical VM instances that you control as a single entity using an instance template. You can easily update all of the instances in the group by specifying a new template in a rolling update. Managed instance groups can automatically scale the number of instances in the group, when your applications require additional compute resources.

Managed instance groups can work with load balancing services to distribute network traffic to all of the instances in the group. If an instance in the group stops, crashes, or is deleted by an action other than the instance groups commands, the managed instance group automatically recreates the instance so it can resume its processing tasks. The recreated instance uses the same name and the same instance template as the previous instance.

Regional managed instance groups are generally recommended over zonal managed instance groups because they allow you to spread application load across multiple zones, rather than confining your application to a single zone or having to manage multiple instance groups across different zones.

In order to create a managed instance group, you first need to create an instance template. The instance group manager then automatically populates the instance group based on the instance template.

Managed instance groups offer auto-scaling capabilities that allow you to automatically add or remove instances from a managed instance group based on increases or decreases in load.

HTTP(S) Load Balancing

HTTP(S) load balancing provides global load balancing for HTTP(S) requests destined for your instances. Your applications are available to your customers at a single anycast IP address, which simplifies your DNS setup. HTTP(S) load balancing balances HTTP and HTTPS traffic across multiple backend instances and across multiple regions.

- A Global Forwarding Rule directs requests from the Internet to a Target HTTP proxy.
- The Target HTTP proxy checks each request against a URL Map to determine the appropriate backend service.
- The backend services, in turn, direct each request to the appropriate backend, based on:
  - serving capacity
  - zone
  - instance health
- The backends themselves contain:
  - an instance group (contains VMs)
  - a balancing mode (tells the load balancing system how to determine “Full Usage”)
  - a capacity scaler (an additional control that interacts with the balancing mode setting).
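The request flow above can be approximated with two small functions: one for the URL map lookup and one for choosing a backend. This is only a sketch under simplifying assumptions: the longest matching path prefix wins, zone proximity is ignored, and the `Backend` fields and service names are invented for the example.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Backend:
    group: str       # instance group name
    healthy: bool    # result of health checks
    capacity: float  # load at which the backend counts as "full usage" (> 0)
    load: float      # current load, same unit as capacity


def pick_service(url_map: Dict[str, str], default_service: str, path: str) -> str:
    """URL map step: longest matching path prefix, else the default service."""
    matches = [prefix for prefix in url_map if path.startswith(prefix)]
    return url_map[max(matches, key=len)] if matches else default_service


def pick_backend(backends: List[Backend]) -> Optional[Backend]:
    """Backend service step: healthy backend with the lowest relative usage."""
    usable = [b for b in backends if b.healthy and b.load < b.capacity]
    if not usable:
        return None
    return min(usable, key=lambda b: b.load / b.capacity)


url_map = {"/video/": "video-service", "/static/": "static-service"}
backends = [
    Backend("ig-us", healthy=True, capacity=100, load=90),
    Backend("ig-asia", healthy=True, capacity=100, load=40),
    Backend("ig-eu", healthy=False, capacity=100, load=0),
]
print(pick_service(url_map, "web-service", "/video/cat.mp4"))
print(pick_backend(backends).group)
```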

An HTTPS load balancer requires at least one signed SSL certificate installed on the target HTTPS proxy for the load balancer. The client SSL session terminates at the load balancer, and the HTTPS load balancer supports the QUIC transport layer protocol.

Cloud Armor enables you to restrict or allow access to your HTTP(S) load balancer at the edge of the Google Cloud network, meaning as close as possible to the user and to malicious traffic.

Cloud CDN

Cloud CDN (Content Delivery Network) uses Google’s globally distributed edge points of presence to cache HTTPS load-balanced content close to your users. Cloud CDN caches content at the edges of Google’s network, providing faster delivery of content to your users while reducing serving costs. You can enable Cloud CDN with a simple checkbox when setting up the backend service of an HTTPS load balancer.

An example: Suppose an HTTPS load balancer has 2 types of backends, with a URL map deciding which backend each request is sent to.

- Managed instance groups in us-central1 and asia-east1, used to handle PHP traffic.

- Cloud Storage bucket in us-east1, used to serve static content.

Now a user A in San Francisco is the first to access a piece of content, which leads to a cache miss at the San Francisco cache site. The cache might attempt to get the content from a nearby cache, say Los Angeles. Otherwise, the request is forwarded to the HTTPS load balancer, which in turn forwards the request to one of your backends. If the content from the backend is cacheable, the cache site in San Francisco can store it for future requests, which are then cache hits.

Cache modes control the factors that determine whether Cloud CDN caches your content. Cloud CDN offers three cache modes:

- USE_ORIGIN_HEADERS
  - Requires origin responses to set cache directives and valid caching headers.
- CACHE_ALL_STATIC
  - Automatically caches static content that does not have the no-store, private, or no-cache directive.
- FORCE_CACHE_ALL
  - Unconditionally caches responses, overriding any cache directive sent by the origin.
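The three modes can be read as a small decision function. The sketch below follows only the descriptions in the list above; in particular, treating `public`, `max-age`, or `s-maxage` as "valid caching headers" is an assumption made for this example, and `should_cache` is not a Cloud CDN API.

```python
def should_cache(mode: str, cache_control: str, is_static: bool) -> bool:
    """Decide whether a response would be cached under the given cache mode."""
    # Parse "public, max-age=3600" into {"public", "max-age"}.
    directives = {
        part.strip().split("=")[0].lower()
        for part in cache_control.split(",")
        if part.strip()
    }
    blocked = bool(directives & {"no-store", "private", "no-cache"})

    if mode == "FORCE_CACHE_ALL":
        return True  # ignores whatever the origin says
    if mode == "CACHE_ALL_STATIC":
        return is_static and not blocked
    if mode == "USE_ORIGIN_HEADERS":
        # Assumption: these directives count as valid caching headers.
        cacheable = bool(directives & {"public", "max-age", "s-maxage"})
        return cacheable and not blocked
    raise ValueError(f"unknown cache mode: {mode}")


print(should_cache("CACHE_ALL_STATIC", "", is_static=True))
print(should_cache("CACHE_ALL_STATIC", "no-store", is_static=True))
print(should_cache("USE_ORIGIN_HEADERS", "public, max-age=3600", is_static=False))
print(should_cache("FORCE_CACHE_ALL", "private", is_static=False))
```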

SSL Proxy Load Balancing

SSL proxy is a global load balancing service for encrypted non-HTTP traffic. This load balancer terminates user SSL connections at the load balancing layer, then balances the connections across your instances using the SSL or TCP protocols. The instances can be in multiple regions, and the load balancer automatically directs traffic to the closest region that has capacity. The proxy supports:

- IPv4 and IPv6

- Intelligent routing

- Certificate management

- Security patching

- SSL Policies

TCP Proxy Load Balancing

TCP proxy is a global load balancing service for unencrypted non-HTTP traffic. This load balancer terminates your customers’ TCP sessions at the load balancing layer and then forwards the traffic to your virtual machine instances using TCP or SSL. These instances can be in multiple regions, and the load balancer automatically directs traffic to the closest region that has capacity. The proxy supports:

- IPv4 and IPv6

- Intelligent routing

- Security patching

Network Load Balancing

Network load balancing is a regional, non-proxied load balancing service. All traffic is passed through the load balancer instead of being proxied, and traffic can only be balanced between VM instances that are in the same region (unlike global load balancers). This load balancing service uses forwarding rules to balance the load on your systems based on incoming IP protocol data such as address, port, and protocol type. It suits, for example, UDP traffic, as well as TCP and SSL traffic on ports that are not supported by the TCP proxy and SSL proxy load balancers.

The backend of a network load balancer can be a template-based instance group or a target pool resource. A target pool resource defines a group of instances that receive incoming traffic from forwarding rules. When a forwarding rule directs traffic to a target pool, the load balancer picks an instance from that target pool based on a hash of the source IP and port and the destination IP and port.
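The idea of hashing the connection tuple to pick an instance can be illustrated as follows. This is a plain hash-and-modulo sketch, not the consistent hashing a production load balancer would use; `pick_instance` and the instance names are hypothetical.

```python
import hashlib
from typing import Sequence


def pick_instance(instances: Sequence[str], src_ip: str, src_port: int,
                  dst_ip: str, dst_port: int) -> str:
    """Map a connection's source/destination IPs and ports to one instance."""
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    # Use the first 8 bytes of the digest as an integer index.
    index = int.from_bytes(digest[:8], "big") % len(instances)
    return instances[index]


pool = ["vm-a", "vm-b", "vm-c"]
first = pick_instance(pool, "198.51.100.7", 50432, "203.0.113.10", 443)
again = pick_instance(pool, "198.51.100.7", 50432, "203.0.113.10", 443)
print(first, again)  # the same connection tuple always lands on the same instance
```

Because the choice depends only on the tuple, packets of one connection keep reaching the same backend as long as the pool does not change.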

Internal TCP/UDP Load Balancing

The internal TCP/UDP load balancer is a regional, private load balancing service for TCP- and UDP-based traffic. It is only accessible through the internal IP addresses of virtual machine instances that are in the same region. This often results in lower latency, because all your load-balanced traffic stays within Google’s network, and it makes your configuration much simpler.

It uses lightweight load balancing built on top of Andromeda, which is Google’s network virtualization stack, to provide software-defined load balancing that delivers traffic directly from the client instance to a backend instance.

Internal HTTP(S) Load Balancing

Internal HTTP(S) load balancing (based on the open source Envoy proxy) is a proxy-based, regional, layer 7 load balancer. It enables you to run and scale your services behind an internal load balancing IP address, and it supports use cases such as traditional 3-tier web services.

Choosing A Load Balancer

| Load Balancing | Traffic Type | Global/Regional | External/Internal | External ports |
| --- | --- | --- | --- | --- |
| HTTP(S) | HTTP or HTTPS | Global, IPv4, IPv6 | External | HTTP: 80 or 8080; HTTPS: 443 |
| SSL Proxy | TCP with SSL offload | Global, IPv4, IPv6 | External | 25, 43, 110, 143, 195, 443, 465, 587, 700, 993, 995, 1883, 5222 |
| TCP Proxy | TCP without SSL offload. Does not preserve client IP addresses. | Global, IPv4, IPv6 | External | 25, 43, 110, 143, 195, 443, 465, 587, 700, 993, 995, 1883, 5222 |
| Network TCP/UDP | TCP/UDP without SSL offload. Preserves client IP addresses. | Regional, IPv4 | External | Any |
| Internal TCP/UDP | TCP or UDP | Regional, IPv4 | Internal | Any |
| Internal HTTP(S) | HTTP or HTTPS | Regional, IPv4 | Internal | HTTP: 80 or 8080; HTTPS: 443 |
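The table can also be read as a decision procedure. The function below is one possible encoding of it; the parameter names are invented for this sketch, and it deliberately ignores the port restrictions listed in the last column.

```python
def choose_load_balancer(traffic: str, external: bool,
                         ssl_offload: bool = False,
                         need_global: bool = False) -> str:
    """Pick a load balancer type from the table.

    traffic: "http" (HTTP or HTTPS), "tcp", or "udp".
    """
    if traffic == "http":
        return "HTTP(S)" if external else "Internal HTTP(S)"
    if traffic not in ("tcp", "udp"):
        raise ValueError(f"unsupported traffic type: {traffic}")
    if not external:
        return "Internal TCP/UDP"
    if traffic == "udp":
        return "Network TCP/UDP"
    # External TCP from here on.
    if ssl_offload:
        return "SSL Proxy"
    # Global reach uses the proxy; regional keeps the client IP address.
    return "TCP Proxy" if need_global else "Network TCP/UDP"


print(choose_load_balancer("http", external=True))
print(choose_load_balancer("tcp", external=True, ssl_offload=True))
print(choose_load_balancer("udp", external=False))
```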

My Certificate

For more on Implementing Networks in Google Cloud, please refer to the wonderful course here https://www.coursera.org/learn/networking-gcp-defining-implementing-networks

I am Kesler Zhu, thank you for visiting my website. Check out more course reviews at https://KZHU.ai