Generative models are a kind of statistical model that aims to learn the underlying data distribution itself. If a generative model captures the underlying distribution of the data well, it can produce new instances that could plausibly have come from the same dataset. You could also use it for anomaly detection, telling you whether a given instance…
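As a rough illustration of the idea in this excerpt, here is a minimal sketch that treats a single Gaussian as the "generative model". It fits the model to data, samples a new instance from it, and uses the log-density as an anomaly score. The synthetic data, the helper names `fit_gaussian` and `log_prob`, and the choice of a Gaussian are all assumptions made for this example, not something taken from the post.

```python
import math
import random
import statistics

def fit_gaussian(samples):
    """Estimate the mean and standard deviation of a 1-D Gaussian."""
    mu = statistics.fmean(samples)
    sigma = statistics.stdev(samples)
    return mu, sigma

def log_prob(x, mu, sigma):
    """Log-density of x under a Gaussian with parameters (mu, sigma)."""
    return -0.5 * math.log(2 * math.pi * sigma ** 2) - (x - mu) ** 2 / (2 * sigma ** 2)

# Synthetic training data (an assumption for this sketch).
rng = random.Random(0)
train = [rng.gauss(5.0, 1.0) for _ in range(1000)]

# "Learning the distribution" here just means estimating two parameters.
mu, sigma = fit_gaussian(train)

# Generation: draw a new instance from the fitted model.
new_instance = rng.gauss(mu, sigma)

# Anomaly detection: a low log-density suggests the point is unlike the data.
typical_score = log_prob(5.0, mu, sigma)
outlier_score = log_prob(15.0, mu, sigma)
print(f"typical: {typical_score:.2f}, outlier: {outlier_score:.2f}")
```

A deep generative model replaces the two-parameter Gaussian with a far more flexible density, but the same two uses (sampling and scoring) carry over.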

Category: Python

TensorFlow: Probabilistic Deep Learning Models

Unfortunately, deep learning models aren’t always accurate, especially when asked to make predictions on new data points that are dissimilar to the data they were trained on. The insight here is that it’s important for models to be able to assign higher levels of uncertainty to incorrect predictions. We want our deep learning models to know what they don’t know. The probabilistic…

Distribution Objects in TensorFlow Probability

We’ll be making extensive use of the TensorFlow Probability library to help us develop probabilistic deep learning models. The distribution objects from the library are the vital building blocks because they capture the essential operations on probability distributions. We are going to use them when building probabilistic deep learning models in TensorFlow. Univariate Distributions Within the tfp…

My #99 course certificate from Coursera

Customising Your Models with TensorFlow 2, Imperial College London. I highly recommend this course to everyone who wants to use TensorFlow 2. I have been using TensorFlow for years, but I still learned a lot from it. One caveat is that this course is not an easy one: it won’t teach you what…

Customizing Models, Layers and Training Loops

Subclassing Models Model subclassing and custom layers give you even more control over how the model is constructed, and can be thought of as an even lower-level API than the functional API. However, with more flexibility comes more opportunity for bugs. In order to use model subclassing, we first import the Model class…

Sequential Data and Recurrent Neural Networks

Sequential data is data that has a natural sequential structure built into it, like text data or audio data. There are various network architectures and layers that we can use to make predictions from sequence data. However, sequence data is often unstructured and not as uniform as other datasets. Preprocessing Sequential Data Each sequence example…

Keras and TensorFlow Datasets

Data pipelines are for loading, transforming, and filtering a wide range of different data for use in your models. Broadly speaking there are two ways to handle our data pipeline: These two modules together offer some powerful methods for managing data and building an effective workflow. Keras Datasets The Keras datasets give us a really…

The Keras Functional API

The reason you might want to use the Functional API (instead of the Sequential API) is that you need more flexibility. For example, you might need multiple inputs and outputs, as in a multitask learning model or a model with conditioning variables, or a complicated non-linear topology with layers feeding into multiple other layers. With the Sequential API, we didn’t have…

My #77 course certificate from Coursera

Understanding and Visualizing Data with Python, University of Michigan. This is a fantastic statistics course taught in the setting of the Python programming language. It astonished me that the professor did a cartwheel at the beginning, very impressive. The course mainly focuses on the fundamentals of statistics. After the concept lectures, there are hands-on videos tutoring…

Probability, Sampling & Inference

In the 1930s, Jerzy Neyman and others made some very important breakthroughs in this area, and their work enabled researchers to use random sampling as a technique to measure populations. This means we do not have to measure every single unit in the population to make statements about the population (inference). The first important step…
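To make the point about random sampling concrete, the following sketch draws a simple random sample from a synthetic population and uses it to estimate the population mean. The population itself (heights in centimetres drawn uniformly between 150 and 200), the sample size, and all variable names are assumptions chosen for illustration only.

```python
import random
import statistics

rng = random.Random(42)

# A synthetic "population" of 100,000 units (an assumption for this sketch).
population = [rng.uniform(150.0, 200.0) for _ in range(100_000)]

# Simple random sample without replacement: every unit is equally likely.
sample = rng.sample(population, 500)

# Inference: estimate the population mean from only 500 measured units.
pop_mean = statistics.fmean(population)
sample_mean = statistics.fmean(sample)

# Standard error of the mean and an approximate 95% confidence interval.
std_error = statistics.stdev(sample) / len(sample) ** 0.5
ci = (sample_mean - 1.96 * std_error, sample_mean + 1.96 * std_error)

print(f"population mean: {pop_mean:.2f}")
print(f"sample mean:     {sample_mean:.2f}")
print(f"approx. 95% CI:  ({ci[0]:.2f}, {ci[1]:.2f})")
```

In practice the population mean is unknown; it is computed here only so the estimate can be compared against it.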

Visualizing Statistical Data

Statistics: Arts and Sciences Statistics is the subject that encompasses all aspects of learning from data. We are talking about tools and methods to allow us to work with data to understand that data. Statisticians apply and develop data analysis methods seeking to understand their properties. Researchers and workers apply and extend statistical methodology. Statistics…

My #67 course certificate from Coursera

Getting started with TensorFlow 2, Imperial College London. Wow, this is a wonderful course on TensorFlow! The professor and lecturers directly teach how to write code to complete certain tasks, piece by piece and step by step. By the time you complete all the programming assignments, you will certainly have gained TensorFlow skills and be confident in your next real job. You’d…

My #61 course certificate (with Honors) from Coursera

Practical Reinforcement Learning, Higher School of Economics. I am very proud that I survived and completed this thorny but exciting journey with Honors. I spent nearly a month on this very challenging course, which covers an enormous amount of knowledge. The lecture videos are all well prepared; they not only help me consolidate my original understanding, but also…

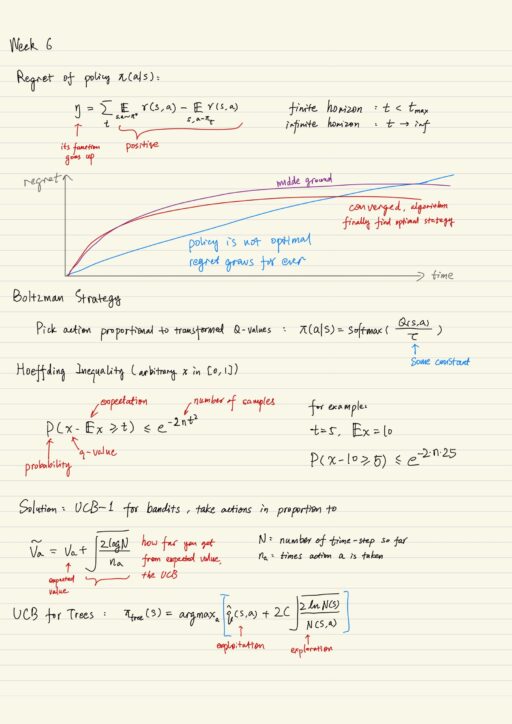

Exploration and Planning in Reinforcement Learning

Exploration is needed to find unknown actions which lead to very large rewards. Most reinforcement learning algorithms share one problem: they learn by trying different actions and seeing which work better. We can use a few made-up heuristics (e.g. epsilon-greedy exploration) to mitigate the problem and speed up the learning process. Multi-armed bandits…
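The epsilon-greedy heuristic mentioned above can be sketched on a small Bernoulli multi-armed bandit. This is a minimal illustration, not the post's own code: the function name `epsilon_greedy_bandit`, the arm probabilities, and the default `epsilon` and `steps` values are all hypothetical choices.

```python
import random

def epsilon_greedy_bandit(true_probs, epsilon=0.1, steps=5000, seed=0):
    """Run epsilon-greedy on a Bernoulli bandit; return value estimates and pull counts."""
    rng = random.Random(seed)
    n_arms = len(true_probs)
    counts = [0] * n_arms    # how often each arm was pulled
    values = [0.0] * n_arms  # running mean reward per arm

    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: pick an arm uniformly at random.
            arm = rng.randrange(n_arms)
        else:
            # Exploit: pick the arm with the highest current estimate.
            arm = max(range(n_arms), key=lambda a: values[a])

        # Bernoulli reward with the arm's (hidden) success probability.
        reward = 1.0 if rng.random() < true_probs[arm] else 0.0

        # Incremental update of the running mean for the chosen arm.
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]

    return values, counts

values, counts = epsilon_greedy_bandit([0.2, 0.5, 0.8])
print("estimated values:", [round(v, 2) for v in values])
print("pull counts:     ", counts)
```

With a small `epsilon`, most pulls go to whichever arm currently looks best, while the occasional random pull keeps the estimates of the other arms from going stale.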

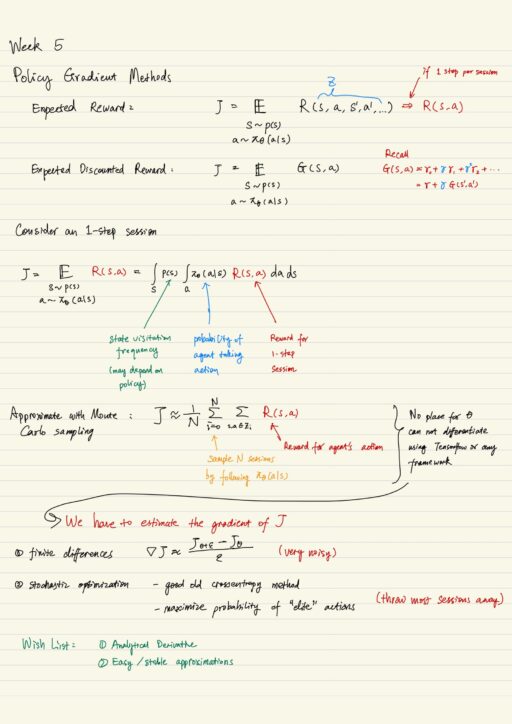

Reinforcement Learning: Policy Gradient Methods

The problems of value-based methods The idea behind value-based reinforcement learning (say, Q-learning) is to find an optimal action in a state, based on how much discounted reward you will get by following a policy. The first problem here is that value-based methods do not explicitly learn “what to do”; instead they learn “what kind of…