Two transport layer protocols, User Datagram Protocol (UDP) and Transmission Control Protocol (TCP), are built upon the best-effort IP service.

User Datagram Protocol (UDP)

User Datagram Protocol is unreliable and connectionless: there is no handshaking and no connection state. Beyond the Internet Protocol services, which know how to deliver a packet to a host, UDP is a very simple protocol that provides only two additional services:

- De-multiplexing enables sharing of IP datagram service

- Error checking on data

UDP has low header overhead, but it has neither flow control, error control, nor congestion control, so UDP datagrams can easily get lost or arrive out of order. UDP is usually used in applications such as multimedia streaming (RTP) and in network services such as DNS, RIP, and SNMP.

The UDP datagram header is simple and includes only a few fields:

- Source port identifies the particular application in the source host to receive replies.

- Destination port allows the UDP module to de-multiplex datagrams to the correct application in the host.

- UDP length

- UDP checksum detects end-to-end errors.
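As a minimal sketch of the header layout, the four fields can be packed and unpacked as 16-bit big-endian integers. The helper names `build_udp_header` and `parse_udp_header` and the port numbers are hypothetical, and the checksum is left at 0 here instead of being computed.

```python
import struct

# The UDP header is four 16-bit fields in network (big-endian) byte order.
UDP_HEADER = struct.Struct("!HHHH")

def build_udp_header(src_port, dst_port, payload, checksum=0):
    """Return an 8-byte UDP header for the given payload (checksum not computed)."""
    length = UDP_HEADER.size + len(payload)  # length covers header plus data
    return UDP_HEADER.pack(src_port, dst_port, length, checksum)

def parse_udp_header(datagram):
    """Split the first 8 bytes of a datagram into its header fields."""
    src_port, dst_port, length, checksum = UDP_HEADER.unpack_from(datagram)
    return {
        "src_port": src_port,
        "dst_port": dst_port,
        "length": length,
        "checksum": checksum,
    }

payload = b"hello"
datagram = build_udp_header(50000, 53, payload) + payload
fields = parse_udp_header(datagram)
print(fields)
```

The destination port is the value the receiving UDP module would use to de-multiplex the datagram to the right application.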

Client ports are ephemeral, while server ports are well-known. Ports 0-255 are reserved for well-known applications, 256-1023 are reserved for less well-known applications, and 1024-65535 are for ephemeral client ports.
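The ranges above can be written as a small lookup. This is only a sketch of the classification as stated in this recap; `classify_port` is a hypothetical helper, and the current IANA registry divides the port space differently (system, user and dynamic ports).

```python
def classify_port(port: int) -> str:
    """Classify a port number using the ranges given in the text above."""
    if not 0 <= port <= 65535:  # port numbers are 16-bit values
        raise ValueError(f"not a valid port number: {port}")
    if port <= 255:
        return "well-known"
    if port <= 1023:
        return "less well-known"
    return "ephemeral"

for port in (53, 520, 50000):
    print(port, classify_port(port))
```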

Transmission Control Protocol (TCP)

Transmission Control Protocol provides connection-oriented, reliable, in-sequence byte stream services. It provides error control, flow control, and congestion control. Many applications use TCP because it offers reliable service: error detection and retransmission are enforced in TCP.

A TCP connection is specified by a 4-tuple (Source IP, Source port, Destination IP, Destination port), so a host can support multiple connections simultaneously. TCP doesn't presume message boundaries and treats the data received from the application layer as a byte stream. TCP groups the bytes into segments and transmits the segments for efficiency. For instance:

Application Application

↓ Write 45 bytes ↑

↓ Write 15 bytes Read 40 bytes ↑

↓ Write 20 bytes Read 40 bytes ↑

TCP buffer - - - - - - - segments - - - - - - - TCP buffer

\ - - ACKs, sequence, window size - - - /

The TCP flow control function is implemented through an advertised Window size field in the header of the segments that travel in the reverse direction. The Window size field informs the transmitter of the number of bytes that can currently be accommodated in the receiver buffer.
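The byte-stream behavior and the advertised window can be illustrated with a toy receive buffer. This is a simplified model, not a TCP implementation: `ReceiveBuffer` and its 100-byte capacity are assumptions made for the example, and the write and read sizes are the ones from the diagram.

```python
class ReceiveBuffer:
    """Toy model of a TCP receive buffer with an advertised window."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.data = bytearray()

    def advertised_window(self):
        # Free space the receiver can still accommodate.
        return self.capacity - len(self.data)

    def deliver(self, segment):
        # Accept only as many bytes as currently fit in the buffer.
        accepted = segment[: self.advertised_window()]
        self.data += accepted
        return len(accepted)

    def read(self, n):
        # The application reads bytes, not messages: boundaries are not kept.
        chunk = bytes(self.data[:n])
        del self.data[:n]
        return chunk

buf = ReceiveBuffer(capacity=100)
for message in (b"A" * 45, b"B" * 15, b"C" * 20):  # three application writes
    buf.deliver(message)
window_after_writes = buf.advertised_window()

first = buf.read(40)   # only part of the first write
second = buf.read(40)  # rest of the first write plus the other two
print(window_after_writes, first, second, buf.advertised_window())
```

The second read spans all three writes, which shows that the receiver cannot tell where one application message ended and the next began.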

Further, TCP enforces a timeout period called the maximum segment lifetime (it depends on the implementation, but is usually 2 minutes) to allow the network to clear old segments.

Three-Way Handshake

Two important header fields, Sequence number and Acknowledgement number, are used in the TCP three-way handshake.

As TCP is built upon best-effort IP, it is possible that older segments arrive late. TCP deals with this problem by using a 32-bit Sequence number, which identifies the position of the first byte of this segment in the sender's byte stream. Its initial value is selected during connection setup, when the flag bit SYN = 1. At any given time, the receiver accepts sequence numbers only from a window that is very small compared with the 32-bit sequence space, so the likelihood of accepting an old segment is very small.

The Acknowledgement number identifies the sequence number of the next data byte that the sender of this segment expects to receive. This field also indicates that the sender of this segment has successfully received all prior data. It is valid only if the flag ACK = 1.
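As a small worked example of this arithmetic, the acknowledgement number is the received sequence number plus the number of sequence numbers the segment consumed, modulo 2^32. The helper `next_ack` is hypothetical; it relies on the fact that the SYN and FIN flags each consume one sequence number even though they carry no data.

```python
SEQ_SPACE = 2 ** 32  # sequence numbers are 32-bit and wrap around

def next_ack(seq, payload_len=0, syn=False, fin=False):
    """Return the Ack# a receiver sends after a segment starting at seq."""
    consumed = payload_len + int(syn) + int(fin)
    return (seq + consumed) % SEQ_SPACE

print(next_ack(303, payload_len=150))   # data segment of 150 bytes
print(next_ack(5086, fin=True))         # FIN with no data
print(next_ack(SEQ_SPACE - 1, syn=True))  # wraparound at the top of the space
```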

The TCP header has 6 control bits:

| Flag | Meaning |
| --- | --- |
| SYN | Establish connection |
| RST | Reset connection |
| ACK | ACK packet flag |
| FIN | Close connection |
| URG | Urgent pointer flag |
| PSH | Override TCP buffering |
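In the header these six flags occupy the low six bits of the flags byte. A minimal sketch of encoding and decoding them follows; the helper names `encode_flags` and `decode_flags` are hypothetical.

```python
# Bit positions of the six control flags within the TCP flags byte.
TCP_FLAGS = {
    "FIN": 0x01,
    "SYN": 0x02,
    "RST": 0x04,
    "PSH": 0x08,
    "ACK": 0x10,
    "URG": 0x20,
}

def encode_flags(*names):
    """Combine flag names into a single flags value."""
    value = 0
    for name in names:
        value |= TCP_FLAGS[name]
    return value

def decode_flags(value):
    """List the names of the flags that are set in a flags value."""
    return [name for name, bit in TCP_FLAGS.items() if value & bit]

syn_ack = encode_flags("SYN", "ACK")
print(hex(syn_ack), decode_flags(syn_ack))
```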

Before any host can send data, a connection must be established. TCP establishes the connection using a three-way handshake protocol. This figure shows the three-way handshake procedure and the read and write operations with BSD socket function calls:

Host A Host B

| | socket

| | bind

| | listen

socket | | accept (blocks)

connect (blocks) | - - - - SYN, Seq# = x - - - → |

| |

connect returns | ← - - - SYN, Seq# = y - - - - |

| ACK, Ack# = x + 1 |

| |

| - - - - Seq# = x + 1 - - - → | accept returns

| ACK, Ack# = y + 1 | read (blocks)

| |

write | - - - Request message - - - - → | read returns

read (blocks) | |

| |

read returns | ← - - - Reply message - - - - - | write

| | read (blocks)

↓ ↓

- Host A randomly generates an initial sequence number Seq# = x and sends a connection request with the SYN bit set to Host B.

- Host B acknowledges the request from Host A by sending back a segment with the SYN bit and a new Seq# = y, together with the ACK bit and Ack# = x + 1 indicating the next byte it expects to receive.

- Host A acknowledges the request from Host B by sending a segment with the ACK bit, Ack# = y + 1, and Seq# = x + 1.

Once Host B receives this segment, the connection is established. During the connection establishment process, if either host decides to refuse a connection request, it can send a reset segment. The three-way handshake ensures that both hosts agree on their initial sequence numbers.
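The same sequence of socket calls can be tried on a single machine over the loopback interface. This is a minimal sketch under a few assumptions: Host B runs in a background thread, the operating system picks a free port, the messages are small enough to arrive in one `recv`, and `run_exchange` is a hypothetical name. The handshake itself is carried out by the kernel inside `connect` and `accept`.

```python
import socket
import threading

def run_exchange(request: bytes) -> bytes:
    # Host B (server): socket, bind, listen.
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0 asks the OS for any free port
    server.listen(1)
    port = server.getsockname()[1]

    def host_b():
        conn, _addr = server.accept()  # blocks until a connection is established
        with conn:
            data = conn.recv(1024)     # read: blocks until the request arrives
            conn.sendall(b"reply to " + data)  # write: send the reply

    thread = threading.Thread(target=host_b)
    thread.start()

    # Host A (client): socket, connect, write, read.
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as client:
        client.connect(("127.0.0.1", port))  # kernel performs the three-way handshake
        client.sendall(request)
        reply = client.recv(1024)

    thread.join()
    server.close()
    return reply

print(run_exchange(b"hello"))
```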

Graceful Close

Each host of the connection can terminate its direction of the TCP connection independently. When a host completes transmission of its data and wants to terminate the connection, it sends a segment with the FIN bit set. When the other host receives the FIN segment, its TCP informs its application.

Host A Host B

| |

| - - - FIN, Seq# = 5086 - - - → |

| |

| ← - - - - ACK = 5087 - - - - - |

| (Host B to A is still open) |

| |

| ← - - - - ACK = 5087 - - - - - |

| Data (150 bytes), Seq# = 303 |

| |

| - - - - ACK = 453 - - - - - → |

| (Host A enters time-wait state) |

| |

| ← - - - - ACK = 5087 - - - - - |

| FIN, Seq# = 453 |

| |

| - - - - ACK = 454 - - - - - → |

↓ ↓

Flow Control

After the connection is established, TCP uses a selective repeat ARQ protocol to provide reliable data transfer, with positive acknowledgement implemented by a sliding window that is based on bytes instead of packets. TCP can also apply flow control over a connection by dynamically advertising the Window size field.

Connection Management

The initial sequence number (ISN) is chosen to protect against segments from previous connections, since segments may circulate in the network and arrive at a much later time. A local clock is used to select the initial sequence number.

The time needed to go through a full cycle of sequence numbers should be greater than the Maximum Segment Lifetime (MSL), which is typically 120 seconds. On high-bandwidth networks, however, the 32-bit sequence number can wrap around in less time than that. The problem can be fixed by combining a 32-bit timestamp with the 32-bit sequence number, which effectively gives a 64-bit sequence number.
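A quick calculation shows why wraparound matters at high bandwidth: the time to consume the whole 32-bit sequence space is 2^32 bytes divided by the sending rate. The sketch below assumes a sender that transmits continuously at the full link rate; the function name and the example link speeds are chosen for illustration only.

```python
MSL_SECONDS = 120  # typical Maximum Segment Lifetime from the text

def wrap_time_seconds(bandwidth_bps, seq_bits=32):
    """Time to send 2**seq_bits bytes at the given rate in bits per second."""
    return (2 ** seq_bits) * 8 / bandwidth_bps

for name, bps in (("10 Mbps", 10e6), ("1 Gbps", 1e9), ("10 Gbps", 10e9)):
    t = wrap_time_seconds(bps)
    verdict = "longer than MSL" if t > MSL_SECONDS else "wraps within one MSL"
    print(f"{name}: {t:.1f} s ({verdict})")
```

At 1 Gbps the sequence space is exhausted in roughly 34 seconds, well inside a 120-second MSL, so an old segment could carry a sequence number that is valid again.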

Congestion Control

If the senders are too aggressive and send too many packets, the network may experience congestion. But if the senders are too conservative, the network will be underutilized. Ideally, the objective of TCP congestion control is to have each sender transmit just the right amount of data to keep the network saturated but not overloaded.

Congestion occurs when the total arrival rate from all packet flows exceeds the outgoing bandwidth R of a router over a period of time. Buffers at the multiplexer fill up and packets get lost. In general there are 3 phases of congestion behavior:

- Light traffic: when the arrival rate is much smaller than R, delay is low and the router can accommodate more.

- Knee stage: when the arrival rate approaches R, delay increases rapidly and throughput begins to saturate.

- Congestion collapse: when the arrival rate is greater than R, packets experience larger delay and loss, and effective throughput drops significantly.

TCP defines a congestion window, which specifies the maximum number of bytes that a sender is allowed to transmit without triggering congestion. The congestion window is maintained by the sender and is not carried in the TCP header. The sender's effective window is the minimum of the congestion window and the advertised window.
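As a rough sketch of how the two windows combine, the number of new bytes a sender may transmit is the effective window minus the bytes already sent but not yet acknowledged. The function name `usable_window` and the byte counts are hypothetical.

```python
def usable_window(cwnd, rwnd, bytes_in_flight):
    """Bytes the sender may still transmit right now.

    cwnd: congestion window kept by the sender
    rwnd: window advertised by the receiver
    bytes_in_flight: bytes sent but not yet acknowledged
    """
    effective = min(cwnd, rwnd)
    return max(0, effective - bytes_in_flight)

# The network is the bottleneck: cwnd is smaller than rwnd.
print(usable_window(cwnd=8000, rwnd=16000, bytes_in_flight=3000))
# The receiver is the bottleneck and the window is already full.
print(usable_window(cwnd=8000, rwnd=4000, bytes_in_flight=5000))
```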

But the problem is that the sender doesn't know what its fair share of the available bandwidth should be. The solution is to probe and adapt to the available network bandwidth dynamically:

- Senders probe the network by increasing the congestion window

- When the congestion is detected, senders reduce rate

- Ideally, sending rate stabilizes near the optimal point
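The probe-and-adapt loop can be sketched with additive increase and multiplicative decrease (AIMD). AIMD is not named in the text above; it is used here as one common policy for illustration. The model is deliberately crude: congestion is "detected" whenever the window exceeds a fixed, hypothetical `capacity`, and the window is counted in segments.

```python
def aimd(capacity, rounds, cwnd=1):
    """Return the congestion window used in each round of a toy AIMD loop."""
    history = []
    for _ in range(rounds):
        history.append(cwnd)
        if cwnd > capacity:
            cwnd = max(1, cwnd // 2)  # congestion detected: cut the window in half
        else:
            cwnd += 1                 # no congestion: probe for more bandwidth
    return history

history = aimd(capacity=8, rounds=12)
print(history)
```

The window climbs until it overshoots the capacity, is halved, and then climbs again, so the sending rate oscillates around the point where congestion begins.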

Mobile IP Routing

Mobile networking is becoming increasingly important as portable devices become more prevalent. The link between a portable device and a fixed communication network is usually wireless. An IP address specifies a point of attachment to the Internet, so the problem is that changing the IP address at run time terminates all connections and sessions.

One requirement in Mobile IP is that a mobile host must continue to use its permanent IP address even as it roams to another area. A simple Mobile IP solution (RFC 2002) is designed to deal with this problem: devices can change their point of attachment while retaining their IP address and maintaining communications.

A home agent keeps track of the location of each mobile host in its network. The home agent periodically announces its presence, and it manages all mobile hosts in its Home network that use the same address prefix.

[Home network] - - {Internet} - - [Foreign network]

↑

[Home agent]

↑

[Mobile host]

When a mobile host moves to a Foreign network, the mobile host obtains a Care-of-Address from the Foreign agent and registers its new address with the Home agent. Then Mobile IP routing happens.

[Home network] - - {Internet} - - [Foreign network]

↓ register ↑

↓ new address ↑

[Home agent] [Foreign agent]

↑

[Mobile host]

Mobile IP routing is arranged like this:

- When the Mobile host is in its Home network, a Correspondent host can send packets as usual. The packets will be intercepted by the Mobile host's Home agent.

- When the Mobile host has relocated to the Foreign network, the Home agent simply forwards packets to the Foreign agent, which then relays the packets to the Mobile host.

- The forwarding is done by providing a tunnel between the Home agent and the Foreign agent.

- The Home agent uses IP-in-IP encapsulation for the tunnel.

- The Mobile host sends packets to the Correspondent host as usual.
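The tunnelling step can be sketched as wrapping the original packet inside a new one addressed to the Care-of-Address. This is a toy model, not the RFC 2002 message formats: the `Packet` and `HomeAgent` classes are hypothetical, and the addresses come from the ranges reserved for documentation.

```python
from dataclasses import dataclass
from typing import Union

@dataclass
class Packet:
    src: str
    dst: str
    payload: Union[bytes, "Packet"]

class HomeAgent:
    def __init__(self, address):
        self.address = address
        self.bindings = {}  # permanent home address -> care-of address

    def register(self, home_address, care_of_address):
        # Called when a mobile host reports its new location.
        self.bindings[home_address] = care_of_address

    def forward(self, packet):
        care_of = self.bindings.get(packet.dst)
        if care_of is None:
            return packet  # host is at home: deliver unchanged
        # Encapsulate: the original packet becomes the payload of the tunnel packet.
        return Packet(src=self.address, dst=care_of, payload=packet)

def foreign_agent_decapsulate(tunnel_packet):
    # The foreign agent strips the outer header and relays the inner packet.
    return tunnel_packet.payload

agent = HomeAgent("192.0.2.1")
agent.register(home_address="192.0.2.50", care_of_address="198.51.100.7")
original = Packet(src="203.0.113.9", dst="192.0.2.50", payload=b"hello")
tunnelled = agent.forward(original)
delivered = foreign_agent_decapsulate(tunnelled)
print(tunnelled.dst, delivered.dst)
```

The inner packet still carries the permanent address as its destination, which is why the Correspondent host never needs to know that the Mobile host has moved.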

[Correspondent host]

↓ ↑

← ↓ ↑ ←

[Home network] - - {Internet} - - [Foreign network]

↓ ⥣ ➾ ➾ ⥥ ↑

[Home agent] [Foreign agent]

⥥ ↑

[Mobile host]

Multicast Routing

Unicast routing assumes a given source transmits its packets to a single destination. However in some circumstances, such as teleconferencing, a source may want to send its packets to multiple destinations simultaneously. This is multicast routing.

One important approach that is used in multicast backbone is called Reverse-Path Multicasting (RPM). The easiest way to understand RPM is by considering a simpler approach called Reverse-Path Broadcasting (RPB), in which a set of shortest paths to a node forms a shortest path tree that spans the network.

Assume each router knows the current shortest path to the source S. Upon receipt of a multicast packet, the router records the packet's source address and the port it arrived on:

- If shortest path to S is through the same port, router forwards the packet to all other ports.

- Else, router drops the packet.
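The forwarding rule above fits in a few lines. In this sketch `rpb_forward` is a hypothetical helper, ports are plain integers, and the router is assumed to already know which port lies on its shortest path to the source.

```python
def rpb_forward(arrival_port, shortest_path_port, all_ports):
    """Return the ports a router forwards a multicast packet to under RPB."""
    if arrival_port != shortest_path_port:
        return []  # packet did not arrive via the shortest path to S: drop it
    # Forward on every port except the one the packet came in on.
    return [port for port in all_ports if port != arrival_port]

ports = [1, 2, 3, 4]
print(rpb_forward(arrival_port=1, shortest_path_port=1, all_ports=ports))
print(rpb_forward(arrival_port=3, shortest_path_port=1, all_ports=ports))
```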

This approach requires that the shortest path from the source to a given router be the same as the shortest path from the router back to the source. The advantage of this approach is that loops are suppressed and each packet is forwarded by a router exactly once.

The Internet Group Management Protocol (IGMP) allows a host to signal its multicast group membership to its attached router. Each multicast router periodically sends an IGMP query message to check whether there are hosts belonging to multicast groups. Routers determine which multicast groups are associated with a certain port. Routers only forward packets on ports that have hosts belonging to the multicast group.

RPM relies on IGMP to identify multicast group memberships. RPM is an enhancement of Truncated RPB. RPM forwards a multicast packet only to a router that will lead to a leaf router with group members.

OpenFlow, SDN, NFV

OpenFlow is an open standard for deploying innovative protocols in production networks. It provides a standardized hook that allows us to run experiments without requiring vendors to expose the internal workings of their network devices. It is now a standard communication interface between the control and forwarding layers of a Software-Defined Network (SDN) architecture.

In a classical router or switch, the data path and the control path occur on the same device. An OpenFlow switch separates these two functions. The data path portion still resides on the switch, while high level routing decisions are moved to a separate controller, typically a standard server. The OpenFlow protocol is used as a communication path between:

| Layer | Role |
| --- | --- |
| Control layer | Handles centralized intelligence for simplified provisioning, optimized performance and granularity of policy management. |
| Infrastructure layer | Consists of routers and switches |

Software-Defined Networking (SDN) is a technology designed to make a production network more agile and flexible. Production networks are often quite slow to change and are dedicated to single services. With software-defined networking we can create a network that handles many different services in a dynamic fashion, allowing us to consolidate multiple services into one common infrastructure.

In 2012, the concept of Network Function Virtualization (NFV) was defined. SDN and NFV complement each other. Network Function Virtualization consolidates different network functions within a virtual server rather than deploying different hardware for each network function.

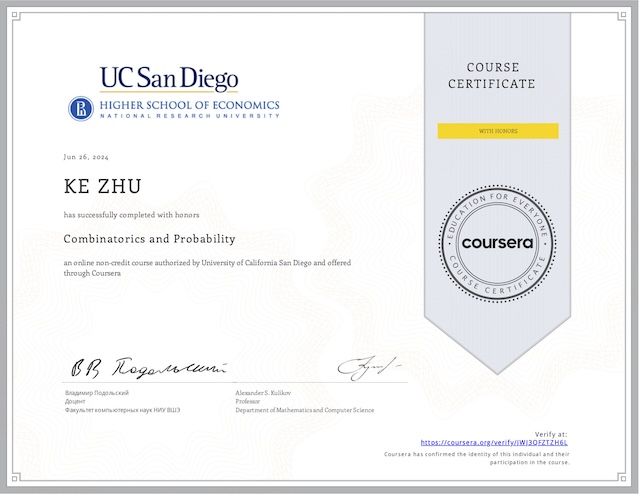

My Certificate

For more on Introduction to Transport Layer Protocols, please refer to the wonderful course here https://www.coursera.org/learn/tcp-ip-advanced

I am Kesler Zhu, thank you for visiting my website. Check out more course reviews at https://KZHU.ai