How should we define virtual reality? Different people have different experiences. To describe these experiences, we often use terms such as immersive, engaging, and interactive.

The user is effectively immersed in a responsive virtual world.

Fred Brooks

VR Hardware

This implies that the user has dynamic control of their viewpoint, which is at the heart of any VR display system. Essentially, three things make VR more immersive:

- 3D stereovision

- User dynamic control of viewpoint

- Surrounding experience

Displays

CAVE stands for Cave Automatic Virtual Environment. It normally looks like an empty room with at least four display walls: three around you plus the floor. Projectors behind the walls project high-resolution images. To perceive 3D stereo vision, the user wears a pair of shutter glasses. At any given time, the user sees through only one lens of the glasses. The displays are synced to the shutter glasses, so they show the image for the correct eye at the correct moment, producing the illusion of 3D stereo vision.
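The alternation between the two eyes can be sketched in a few lines. This is only an illustration of the idea, not how any particular CAVE is driven: the function names are hypothetical, and the choice that even frames go to the left eye is an assumption.

```python
def eye_for_frame(frame_index: int) -> str:
    """Return which eye the display renders for on a given frame.

    Assumption for illustration: even frames are for the left eye,
    odd frames for the right eye. The shutter glasses would open the
    matching lens and close the other one.
    """
    return "left" if frame_index % 2 == 0 else "right"


def per_eye_rate(display_hz: float) -> float:
    """Each eye sees every second frame, so it gets half the display rate."""
    return display_hz / 2


# The first four frames alternate between the eyes.
schedule = [eye_for_frame(i) for i in range(4)]
print(schedule)
print(per_eye_rate(120.0))
```

The second function shows the cost of this scheme: because the frames are shared between the eyes, each eye receives only half of the display's refresh rate.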

Dynamic control of the viewpoint is achieved by a head-tracking device (mounted on top of the shutter glasses) and by updating the display in real time according to the user's viewpoint. The drawback is that the viewpoint is controlled by only one user; for other users, the display can look somewhat distorted.

HMD stands for head-mounted display. The displays usually sit just in front of the user's eyes. An HMD is much more portable, flexible, and easy to use, but has lower resolution.

| | CAVE | HMD |
|---|---|---|
| Device | large dedicated space | flexible and portable |
| Display | high resolution (need powerful machines) | low resolution |
| User | single | single |

Head Tracking

We track the user’s head in order to update the displays according to the user’s viewpoint (the head’s rotation and position). This must be done quickly to maintain immersion. In a CAVE, accelerometers or gyroscopes are usually built into the user’s shutter glasses to track the rotation of the head.

For position tracking, external optical tracking devices are normally used. In the CAVE, this can be several ceiling-mounted infrared cameras pointing at a user, so that they can calculate the position of the user and feed the data to the machine, which then updates the displays accordingly.
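To make the idea of updating the display from the head pose concrete, here is a minimal sketch. It assumes a simplified pose (a position plus a single yaw angle about the vertical y axis) and a convention where the viewer looks down the negative z axis; real systems track full 3D rotation, and the function name is hypothetical.

```python
import math


def world_to_head(point, head_pos, head_yaw_deg):
    """Express a world-space point in the head's coordinate frame.

    Simplifying assumptions: the head rotates only about the vertical
    (y) axis, and yaw = 0 means looking down the negative z axis.
    """
    # Step 1: translate so that the head sits at the origin.
    dx = point[0] - head_pos[0]
    dy = point[1] - head_pos[1]
    dz = point[2] - head_pos[2]
    # Step 2: undo the head rotation (rotate by the negative yaw).
    a = math.radians(-head_yaw_deg)
    x = dx * math.cos(a) + dz * math.sin(a)
    z = -dx * math.sin(a) + dz * math.cos(a)
    return (x, dy, z)


# An object 2 m straight ahead of a user standing at x = 1.
print(world_to_head((1.0, 0.0, -2.0), (1.0, 0.0, 0.0), 0.0))
# After a 90 degree turn, an object at (-1, 0, 0) is the one straight ahead.
print(world_to_head((-1.0, 0.0, 0.0), (1.0, 0.0, 0.0), 90.0))
```

Every frame, the tracker would supply a fresh position and rotation, and the scene would be re-expressed relative to the head in this way before it is drawn.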

The same applies to head tracking for an HMD (head-mounted display). However, the external infrared sensor is mounted in front of the user, and the capture range depends on how the sensor is set up.

Not all devices are built with position tracking, and without it you won’t be able to move around in VR by physically moving. A controller such as a touchpad or joystick can work around the problem, but it won’t feel as natural, and you won’t have a good sense of scale in VR without position tracking.

Controllers

What users hold in their hands is something called a VR controller (also called a wand). In VR, you will be interacting with objects using these wands. In order to use the wand to interact with objects in 3D in a realistic way, we should track the wand’s rotation and position. This is done exactly the same way as head tracking.

The wand is also used for navigation. We can use the wand to walk around in the 3D world without changing our physical position. This lets users explore a virtual world that is bigger than the physical space they are actually in.

Choosing VR Devices

There are a few ways that the VR applications can reach targeted users:

- Mass market.

  - Upload to the internet, users download and try on their own devices.

  - Mobile VR.

- Exhibition to reach clients.

  - High-end HMDs.

- Clients come to you directly.

  - VR for therapy. Integrated simulation solution for trainings.

  - High-end HMDs or a bespoke CAVE.

Factors to consider, with some examples:

| Category | Cost | Tracking | Brand | Resolution | Refresh rate | Weight | Controller |
|---|---|---|---|---|---|---|---|
| Mobile | < $500 | Rot | Samsung | 1280×1440 | 60Hz | 318g | Rot |
| Console | ~ $1000 | Pos+Rot | PlayStation | 960×1080 | 120Hz | 610g | Rot, Pos, Vibration |
| High-end HMDs | > $2000 | Pos+Rot | Oculus | 1080×1200 | 90Hz | 470g | Rot, Pos, Vibration |
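As a rough sketch of how such a comparison could drive a decision, the table can be encoded as data and filtered by requirements. The tracking, refresh rate, and weight values below are copied from the table; the class and function names are hypothetical, and cost is left out because the table gives only rough ranges.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Headset:
    category: str
    tracking: frozenset  # subset of {"rot", "pos"}
    refresh_hz: int
    weight_g: int


# Values taken from the comparison table above.
HEADSETS = [
    Headset("Mobile", frozenset({"rot"}), 60, 318),
    Headset("Console", frozenset({"rot", "pos"}), 120, 610),
    Headset("High-end HMDs", frozenset({"rot", "pos"}), 90, 470),
]


def candidates(need_position: bool, min_refresh_hz: int = 0):
    """Return the categories that satisfy the given requirements."""
    return [
        h.category
        for h in HEADSETS
        if (not need_position or "pos" in h.tracking)
        and h.refresh_hz >= min_refresh_hz
    ]


print(candidates(need_position=True))
print(candidates(need_position=False, min_refresh_hz=90))
```

An application that needs users to walk around would set `need_position=True`, which rules out the rotation-only mobile category.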

Technical Framework

VR can be divided into 3 technical components, as a framework to think about VR applications:

- Display

- Content

- Interaction

When it comes to the content, there are 2 different types: 360 Video VR and Model-based VR (CGI).

| 360 Video | Everything is captured from real life with cameras. The information is stored in the format of images or pixels. Content is highly realistic and capturing is fast, but you are stuck with what you captured. |

| Model-based VR (CGI) | Create and animate 3D models you need, then capture these models with virtual cameras. You get computer generated images as well as all the models and animations you created. Go beyond real life. The 3D world can be programmed to respond to your actions in real time. |

Understanding which type of VR content you are dealing with will help you make decisions about which VR display and VR interaction to have.

| 360 Video | Best viewed using mobile VR (with rotation tracking only). Not interactive in the same way as model-based VR. |

| Model-based VR (CGI) | Best viewed on a high-end VR display (with both rotation and position tracking). Supports real-time interaction with VR controllers. |

Avatar

HMDs have a problem. The moment users look down or reach forward with their hands, they realize that something is missing, because the brain automatically looks for the body, which is no longer there.

There is a simple fix for this problem: give the user a self avatar. If you manage to put it where users expect their body to be, this has a very strong effect. It gives the user a proprioception match. Proprioception means someone’s overall sense of the relative position of the parts of their own body.

This enables us to do something even more interesting: we can easily change the appearance and shape of our virtual body.

Application Areas

Some examples of VR applications:

- Sports

- News and documentary films

- Scientific data visualization

- Medical training

- Physical Rehabilitation and psychotherapy

Level of Immersion

An ideal VR system would display to all sensory systems: vision, sound, haptics (the feeling of touch and force feedback), smell, and taste.

Immersion is a property of a system: it is a technical description of what the system can deliver. In general, one system is more immersive than another if the first can be used to simulate the second.

For example, a head-mounted display that tracks both rotations and translations (six degrees of freedom) is more immersive than a display that tracks only rotations, because the capabilities of the second display are a subset of those of the first. This is important because it gives you a qualitatively different experience and different information.

A head-mounted display with six-degrees-of-freedom tracking is more immersive than the CAVE, because we could use the former to simulate the whole process of going into a CAVE and being in a CAVE.
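The subset argument for tracking can be written down directly. The sketch below models only the tracked degrees of freedom, which is one narrow aspect of immersion; the names are hypothetical and it does not capture the broader notion of one system simulating another, such as the HMD and CAVE comparison.

```python
# Degrees of freedom a display can track, modeled as sets.
ROTATION = frozenset({"yaw", "pitch", "roll"})
TRANSLATION = frozenset({"x", "y", "z"})

THREE_DOF = ROTATION                # rotation-only tracking
SIX_DOF = ROTATION | TRANSLATION    # rotation plus position tracking


def at_least_as_immersive(a: frozenset, b: frozenset) -> bool:
    """True if system a tracks everything system b tracks.

    In that case a could simulate b by simply ignoring the extra
    tracking data it has available.
    """
    return a >= b


print(at_least_as_immersive(SIX_DOF, THREE_DOF))
print(at_least_as_immersive(THREE_DOF, SIX_DOF))
```

The relation is one-directional: a six-degrees-of-freedom display can discard position data to behave like a rotation-only display, but the reverse is not possible.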

Sensorimotor Contingency

Sensorimotor contingency is part of the theory of active vision, which describes how we use our bodies to perceive. We have a set of implicit rules that we use to perceive the world across all the different senses.

It is important to notice what affordances the VR system gives you for perception. If an HMD offers six degrees of freedom, users will have sensorimotor contingencies that are more or less the same as in real life.

Beyond interaction, sensorimotor contingency is the combination of how you perceive and what you perceive. The more closely how you perceive matches reality, the more what you perceive matches what you would expect to see in reality through the act of perception.

If the system affords natural sensorimotor contingencies, so that you perceive in the virtual world in much the same way as you do in the real world, the simplest hypothesis for the brain to make is “the virtual world is where you are”.

The Psychology of VR: Three Illusions

Since the early 1990s, people have used the concept of “Presence” to describe the feeling of being in a place. The term “place illusion” describes this feeling of being there, even though you know you are not. The place illusion can occur even if nothing is happening in the environment.

The second illusion is called the “plausibility illusion”, which concerns how real you take the events to be. It is the illusion that the events you are perceiving and engaged in are really happening.

The third illusion is the “embodiment illusion”, which can have considerable consequences for your own attitudes, behaviors and so on.

Place Illusion

Place illusion is the illusion of being in a place even though you know you are not there. You usually do not marvel at the fact that you are in a place, because you are always in a place. But in virtual reality there is a wow factor that comes from the illusion of being in a place different from where you really are.

An example is the bystander problem in social psychology: you are somewhere and suddenly two other people start fighting; one is the perpetrator, who is attacking the other, the victim. What do you do? One theory says that “the more other people are there, the less likely it is that any individual will do anything to help.” In virtual reality we can create a situation where a violent event like that happens and, because of Place Illusion, people do have the illusion that they are in reality.

Another example is the fear of public speaking in clinical psychology. If a clinical psychologist wants to expose someone to audiences, a therapist can now use a virtual audience to work with the patient on conquering the anxiety, even though the patient knows it is not real.

Place Illusion vs Immersion

Recall that immersion is a description of what a system offers, whereas Place Illusion has to do with the response of the individual. So the question is: “does the level of immersion cause place illusion?” No. Immersion provides a framework (or a basis) in which Place Illusion can occur. Different types of immersive system give rise to the possibility of different types of place illusion. Furthermore, people with different experience of VR will have very different attitudes toward looking for things that don’t work. So immersion does not cause place illusion; it allows the possibility of it, and different levels of immersion make different kinds of place illusion possible.

Break of Presence

Because place illusion (presence) depends on sensorimotor contingencies (given by the level of immersion), glitches cause temporary breaks in place illusion, but the place illusion comes back again. The number of breaks in presence that people experience can be used as a way of measuring the overall level of place illusion, or presence, in the environment.

But when plausibility breaks, it does not come back again, because plausibility is more cognitive whereas place illusion is more perceptual.

Plausibility Illusion

Plausibility Illusion is not the illusion that you are in the place, but the illusion that what is happening is really happening, even though you know it is not. In virtual reality, if virtual characters just ignore you and never respond (because the program has no awareness of you), this is a failure of plausibility. There are three contributors to the plausibility illusion:

- Events occur in relation to you personally.

- The virtual world responds to you.

- Credibility. When you simulate something that could happen in real life, you’d better find out what people’s expectations are, and conform to that.

When you get Place Illusion and Plausibility Illusion together, you tend to respond as you would in real life. We can measure how successful a virtual environment is by whether people respond appropriately to the situation they are encountering.

Embodiment Illusion

First of all, there are two interesting experiments from the history of the field:

- The Pinocchio Illusion was covered in a very famous 1988 paper by James Lackner, which reviewed a whole range of proprioceptive and multi-sensory illusions. The participant feels that they have a long nose like Pinocchio.

- The rubber hand illusion was first written about in a one-page paper by Botvinick and Cohen in Nature in 1998. The participant comes to treat a rubber hand as their own.

Psychological Effects

Both experiments show how easy it is for people to experience a change in the sensation of parts of their body. A match between what we see and what we feel on our skin creates the sense of having a body. A nice way to think about it is a framework in which the brain is constantly trying to predict the sensory stimuli and, as they come in, work out what is going on in the real world.

The brain does not have some internal way of knowing what is part of our body and what is not. It can only rely on the signals it gets from the different senses. In the Pinocchio illusion and the rubber hand illusion, the participant matches up:

- the incoming tactile sensations on the skin, with

- the visual sensations coming from the eyes.

In the real world, that is a pretty good indicator of what is part of me and what is not.

In the illusions above, we create a very special situation in which tactile and visual sensations match up even though the objects being touched are totally different. This creates unusual correlations in the signals coming into the brain, and the brain concludes that the object it sees is part of the body.

Visual-Tactile and Virtual-Motor Synchrony

With virtual reality, we can go beyond the rubber hand illusion. After a few minutes of synchronous tapping on both the real hand and the virtual hand, we can program the virtual hand to move. Even though the participant is asked to keep their real hand still, we find increased electrical activity in the real hand, and this correlates with the strength of the participant’s illusion: the stronger the illusion that the virtual hand is their own, the more their real hand moves when the virtual hand moves. Participants in the asynchronous condition did not show the same level of muscle activity. This is triggered by Visual-Tactile Synchrony.

Virtual-Motor Synchrony is when you move your body and see the virtual body move synchronously. Seeing a virtual body when you look down, that is, a first-person view of a life-size virtual body visually substituting for your own, is already enough to give you not just the embodiment illusion but also place illusion.

With model-based VR, we can do even more. You put on a head-mounted display that is tracked. When you look down towards your body, a virtual body substitutes for your real one. The way we provide virtual tactile feedback today is to put vibrators on different parts of the body. When a virtual object touches a part of your virtual body, we fire the vibrator on the matching part of your real body synchronously.

Changes in Attitude, Behaviors, and Cognition

The illusion can be used to change people’s attitudes, behaviors, and cognition. We can take adults, put them in a child’s body, and ask them to estimate the size of objects. Children have different body proportions than adults do. Those in the child body overestimated sizes by more than double the amount of those who had been in the adult body. Simply being in the body of a child altered their perception, not just because of its size but because of its shape.

In the Implicit Association Test, people are asked to categorize aspects of their own identity very rapidly. Those in the child body categorized themselves as far more childlike than those who had been in the adult body. They also realized how scary it is to be a small child among big parents, and they changed their behavior when talking to their own children, getting down on their knees to bring themselves to the child’s height. The experiments had a huge impact on people’s sense of empathy with their children.

Racial bias can also be measured using the Implicit Association Test. If you are white and you associate “white face with good attributes” and “black face with bad attributes” faster than “white face with bad attributes” and “black face with good attributes”, that indicates a bias. White people who spent a few minutes embodied in a black body showed less racial bias than before, even a week after the exposure.

Also, when people interact with a virtual partner, they unconsciously mimic the body posture and behavior of that partner if it has the same virtual skin color as they do. Your virtual identity dominates how your social affiliation works in virtual reality. Unconscious mimicry is usually a sign of social harmony, so your behavior changes subconsciously.

Self-Counseling and Treating Depression

The same idea of embodiment could be used in a more counseling and therapeutic context.

VR can change our perception of ourselves. You often give better advice to friends than to yourself. Somehow, by taking the perspective of the other person, you are able to get outside the loop and see things differently.

VR can help you do self-counseling in this way. You are embodied in a copy of yourself and ask a question to a friend. Then everything goes wavy and you are in the body of your friend, hearing what you just said a moment ago. You (now as your friend) can ask a question back to yourself. Then you are switched back to your own body and can continue the conversation.

Using this technique also helped people with depression improve their mood.

Challenges in VR

Realism

In a lot of applications, it is very important to create realistic-looking graphical representations of the real world. Other times, we might want to create an imaginary fantasy world, but we still want users to understand and connect with that world. Objects have to interact with lighting in a similar way as they do in real life.

Something made from fabric is a perfect diffuse surface, which reflects light equally in all directions and produces a smooth, even-looking reflection. On the other hand, something made of metal is a specular surface, which reflects light in a certain direction, producing a highlight effect. Diffuse reflection is computationally cheaper to produce, while specular reflection is a bit more mathematically complicated. The most computationally expensive images to produce are glass or mirror surfaces. These are surfaces you can see through or surfaces that reflect things around them.
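The difference between the two kinds of reflection can be illustrated with two classic shading formulas. These are named here as an assumption, since the notes do not say which models a given engine uses: Lambert's cosine law for the diffuse term and the Phong model for the specular term. The function names and the `shininess` value are illustrative.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(x / length for x in v)


def diffuse(normal, to_light):
    """Lambert term: depends only on the angle between surface and light."""
    return max(0.0, dot(normalize(normal), normalize(to_light)))


def specular(normal, to_light, to_viewer, shininess=32):
    """Phong term: also depends on where the viewer is standing."""
    n, l, v = normalize(normal), normalize(to_light), normalize(to_viewer)
    n_dot_l = dot(n, l)
    if n_dot_l <= 0.0:
        return 0.0  # the light is behind the surface
    # Mirror the light direction about the surface normal.
    r = tuple(2.0 * n_dot_l * nc - lc for nc, lc in zip(n, l))
    return max(0.0, dot(r, v)) ** shininess


up = (0.0, 1.0, 0.0)
light = (1.0, 1.0, 0.0)
print(diffuse(up, light))                      # same for every viewer
print(specular(up, light, (-1.0, 1.0, 0.0)))   # viewer in the mirror direction
print(specular(up, light, (0.0, 1.0, 0.0)))    # viewer elsewhere: almost nothing
```

The diffuse term never looks at the viewer, which is part of why it is cheaper, while the specular term produces a bright highlight only near the mirror direction.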

Illumination realism concerns how light is reflected by the 3D objects, which is important in creating a realistic and believable environment. In local illumination, we only consider light that comes directly from the light source. In global illumination, we also consider the inter-reflection between objects. Global illumination creates more realistic environments, but it is more computationally expensive. Sometimes, in order to render real-time graphics in VR while maintaining the required high frame rate, we have to turn off global illumination.

Most game engines nowadays come with a built-in physics engine that takes care of physical simulations. Another powerful animation tool that often comes as part of a game engine is the particle system. It is often used to generate effects such as smoke, fire, and snow.
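To give a feel for what a particle system does under the hood, here is a deliberately tiny sketch. It is not the API of any particular game engine: the `Particle` class, the `step` function, and the one-dimensional motion are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Particle:
    y: float          # height above the emitter
    vy: float         # upward speed
    age: float = 0.0  # seconds since the particle was emitted


def step(particles, dt, lifetime=1.0, emit_speed=1.0):
    """Advance the system by dt seconds.

    Each step moves and ages the existing particles, drops the ones
    that have reached their lifetime, and emits one new particle.
    """
    alive = []
    for p in particles:
        p.age += dt
        p.y += p.vy * dt
        if p.age < lifetime:
            alive.append(p)
    alive.append(Particle(y=0.0, vy=emit_speed))
    return alive


particles = []
for _ in range(3):
    particles = step(particles, dt=0.5)
print(len(particles), [p.y for p in particles])
```

Because old particles expire as new ones are emitted, the population settles at a steady size, which is what makes effects like smoke look continuous.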

Navigation

There are a few ways of navigating in the virtual world:

- Physical navigation, the most natural way.

- Walk-in-place.

- Teleporting, where the user can jump among a few pre-set locations.

- Virtual navigation, with a joystick or pad.

Nausea and Simulation Sickness

Nausea in VR is also called simulation sickness. It is mainly caused by a conflict between the information the brain receives from the vestibular system and from the visual system. The best way to avoid simulation sickness is to pay attention to how users navigate in the virtual environment. Because the displays are very close to your eyes, you might also experience eye strain. A frame rate below 90 Hz can cause discomfort, as can flashing or high-contrast images.
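The 90 Hz figure translates into a time budget for rendering each frame, which is simple arithmetic. The helper names below are hypothetical.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / refresh_hz


def meets_target(render_ms: float, refresh_hz: float = 90.0) -> bool:
    """True if a frame rendered in render_ms keeps up with the target rate."""
    return render_ms <= frame_budget_ms(refresh_hz)


print(round(frame_budget_ms(90.0), 2))  # about 11.11 ms per frame
print(meets_target(10.0))
print(meets_target(14.0))
```

At 90 Hz everything in the scene has to be drawn in roughly 11 ms, which is why expensive effects such as global illumination are sometimes switched off.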

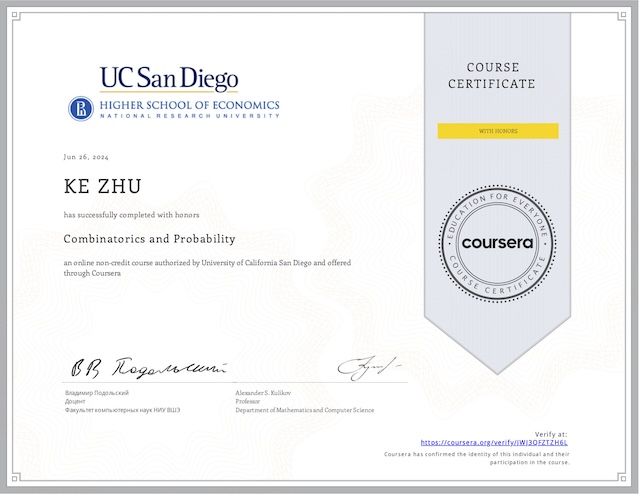

My Certificate

For more on Virtual Reality and Psychology, please refer to the wonderful course here https://www.coursera.org/learn/introduction-virtual-reality

I am Kesler Zhu, thank you for visiting my website. Check out more course reviews at https://KZHU.ai